MIDI: differences between revisions

Adding translation of the English Wikipedia article |

|||

| Line 1: | Line 1: | ||

{{Em tradução|en:MIDI}} |

|||

{{Sem-fontes|data=março de 2012| angola=| arte=| Brasil=| ciência=| geografia=| música=| Portugal=| sociedade=|1=|2=|3=|4=|5=|6=}} |

|||

{{Ver desambig| |Midi}} |

|||

== MIDI messages ==

|||

General MIDI (GM) is a specification for synthesizers that imposes several requirements beyond the more general MIDI standard. While the MIDI standard provides a communications protocol that ensures that different instruments (or components) can interact at a basic level (e.g., playing a note on a MIDI keyboard causes a sound module to play a musical note), General MIDI goes further in two ways: it requires that all GM-compatible instruments meet a minimum specification (such as at least 24 notes of polyphony), and it attaches specific interpretations to many parameters and control messages that were left unspecified in the MIDI standard (such as defining an instrument sound for each of the 128 program numbers).

|||

[[File:Ented, Nokturn a-moll - Jesienny.ogg|thumb|Example of music created in MIDI format]]

|||

=== Transmission of MIDI messages ===

|||

[[File:Synth rack @ Choking Sun Studio.jpg|thumb|alt=Several rack-mounted synthesizers that share a single controller|MIDI allows several instruments to be played from a single controller (often a keyboard, as pictured here), which makes stage setups much more portable. This system fits into a single rack case. Before the advent of MIDI, it would have required four separate keyboards, plus an external mixer and [[unidade de efeitos|effects units]].]]

|||

For transmission, each MIDI message is handed in parallel to a UART (''Universal Asynchronous Receiver-Transmitter''), which converts it to a serial format. This serial link is used between devices at a transmission rate of 31,250 bits per second (31.25 kbit/s).
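The bit rate fixes how long each byte occupies the cable. The following is a minimal sketch, assuming the serial framing of the MIDI 1.0 specification (one start bit, eight data bits and one stop bit per byte), which this article does not state; the function name is illustrative only.

```python
# Assumption: each MIDI byte is framed as 1 start bit + 8 data bits + 1 stop bit.
BAUD_RATE = 31_250       # bits per second, as stated in the text
BITS_PER_BYTE = 10       # assumed framing, not given in this article


def transmission_time_us(num_bytes: int) -> float:
    """Return the time in microseconds needed to send num_bytes over a MIDI link."""
    if num_bytes < 0:
        raise ValueError("num_bytes must be non-negative")
    return num_bytes * BITS_PER_BYTE * 1_000_000 / BAUD_RATE


if __name__ == "__main__":
    print(transmission_time_us(1))  # a single byte
    print(transmission_time_us(3))  # a three-byte message
```

Under these assumptions a three-byte message takes a little under one millisecond, which is why dense chords sent over a single cable arrive as a rapid sequence rather than truly simultaneously.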

|||

'''MIDI''' (short for '''Musical Instrument Digital Interface''') is a [[norma técnica|technical standard]] that describes a [[protocolo|protocol]], a [[circuito digital|digital interface]] and [[conector elétrico|connectors]], and allows a wide variety of electronic musical instruments, [[computador|computers]] and other related devices to connect and communicate with one another.<ref>{{citation |last=Swift|first= Andrew. |url=http://www.doc.ic.ac.uk/~nd/surprise_97/journal/vol1/aps2/ |title=A brief Introduction to MIDI |work=SURPRISE |publisher=Imperial College of Science Technology and Medicine |date=Maio de 1997 |accessdate=22 de agosto de 2012}}</ref> A single MIDI link can carry up to sixteen channels of information, each of which can be routed to a separate device.

|||

=== Controllers ===

|||

Controllers are devices that generate MIDI messages.

|||

MIDI carries event messages that specify [[notação musical|notation]], [[pitch]] and [[velocidade|velocity]], control signals for parameters such as volume, [[vibrato]], [[panning (áudio)|panning]], cues, and MIDI clock signals that set and synchronize [[ritmo|tempo]] between multiple devices. These messages are sent over a MIDI cable to other devices, where they control sound generation and other features. This data can also be recorded into a hardware or software device called a [[sequenciador|sequencer]], which can be used to edit the data and play it back at a later time.<ref name="Huber">Huber, David Miles. "The MIDI Manual". Carmel, Indiana: SAMS, 1991.</ref>{{rp|4|date=Novembro de 2012}}
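As an illustration of how pitch, velocity and channel travel together in one event message, here is a minimal sketch of a Note On message. It assumes the MIDI 1.0 byte layout (status byte 0x90 combined with a channel number from 0 to 15, followed by two 7-bit data bytes), which this article does not spell out; the function name is hypothetical.

```python
NOTE_ON_STATUS = 0x90  # assumed from the MIDI 1.0 specification, not from this article


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a three-byte Note On message for one of the sixteen channels."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0..15 (sixteen channels)")
    if not 0 <= note <= 127:
        raise ValueError("note must be a 7-bit value (0..127)")
    if not 0 <= velocity <= 127:
        raise ValueError("velocity must be a 7-bit value (0..127)")
    # The low four bits of the status byte carry the channel number.
    return bytes([NOTE_ON_STATUS | channel, note, velocity])


if __name__ == "__main__":
    # Middle C (note number 60) on the first channel, moderately loud.
    print(note_on(0, 60, 100).hex(" "))
```

Because the channel lives in the status byte, sixteen devices can share one cable and each can respond only to the messages addressed to its own channel.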

|||

=== System exclusive ===

|||

System exclusive messages, abbreviated ''SysEx'', are messages whose structure is defined specifically by the device that will receive them, and that structure may contain any kind of data. For example, a MIDI device may specify ''SysEx'' messages that contain [[ASCII]] characters.
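The ASCII example can be sketched as follows. This assumes the MIDI 1.0 framing of system exclusive data (a 0xF0 start byte, a manufacturer ID, 7-bit data bytes and a 0xF7 end byte) and uses the ID 0x7D, which the specification sets aside for non-commercial use; none of these values appear in this article, and the function name is hypothetical.

```python
SYSEX_START = 0xF0        # assumed from the MIDI 1.0 specification
SYSEX_END = 0xF7          # assumed from the MIDI 1.0 specification
NON_COMMERCIAL_ID = 0x7D  # assumed ID reserved for non-commercial use


def ascii_sysex(text: str, manufacturer_id: int = NON_COMMERCIAL_ID) -> bytes:
    """Wrap ASCII text in a system exclusive message."""
    if not 0 <= manufacturer_id <= 127:
        raise ValueError("manufacturer_id must be a 7-bit value")
    # ASCII code points are all below 128, so every payload byte is a valid
    # 7-bit data byte; non-ASCII text raises UnicodeEncodeError here.
    payload = text.encode("ascii")
    return bytes([SYSEX_START, manufacturer_id]) + payload + bytes([SYSEX_END])


if __name__ == "__main__":
    print(ascii_sysex("Hi").hex(" "))
```

A real device defines its own payload layout after the manufacturer ID, so a receiver that does not recognize the ID simply ignores everything up to the end byte.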

|||

MIDI technology was standardized in 1983 by a panel of music industry representatives, and is maintained by the ''MIDI Manufacturers Association'' (MMA). All official MIDI standards are jointly developed and published by the MMA in Los Angeles, California, USA, and, for Japan, by the MIDI Committee of the ''Association of Musical Electronics Industry'' (AMEI) in Tokyo.

|||

== Formats ==

|||

=== GM === |

|||

Advantages of MIDI include compactness (an entire song can be encoded in a few hundred lines, that is, in a few [[kilobyte]]s), as well as ease of modification, manipulation and choice of instruments.<ref>http://www.instructables.com/id/What-is-MIDI/</ref>

|||

{{Artigo principal|[[General MIDI]]}} |

|||

==History==

|||

===Development of MIDI===

|||

By the end of the 1970s, electronic musical devices were becoming increasingly common and affordable in North America, Europe and Japan. Early [[Sintetizador|analog synthesizers]] were usually monophonic, and controlled via a [[tensão|voltage]] produced by their keyboards. Manufacturers used this voltage to link instruments together, so that one device could control one or more others, but this system was inadequate for controlling newer polyphonic and digital synthesizers.<ref name="Huber"/>{{rp|3|date=Novembro de 2012}} Some manufacturers created systems that allowed their own equipment to be interconnected, but the systems were incompatible, so one manufacturer's systems could not synchronize with another's.<ref name="Huber" />{{rp|4|date=Novembro de 2012}}

|||

In June 1981, [[Roland Corporation]] founder Ikutaro Kakehashi proposed the idea of standardization to [[Oberheim Electronics]] founder Tom Oberheim, who then talked it over with Sequential Circuits president Dave Smith. In October 1981, Kakehashi, Oberheim and Smith discussed the idea with representatives from [[Yamaha]], [[Korg]] and [[Kawai]].<ref name="chadab5100"/>

|||

Sequential Circuits engineers and synthesizer designers Dave Smith and Chet Wood devised a universal synthesizer interface, which would allow direct communication between equipment from different manufacturers. Smith proposed this standard at the [[Audio Engineering Society]] show in November 1981.<ref name="Huber"/>{{rp|4|date=Novembro de 2012}} Over the next two years, the standard was discussed and modified by representatives of companies such as Roland, Yamaha, Korg, Kawai, Oberheim, and Sequential Circuits,<ref name="Holmes">Holmes, Thom. ''Electronic and Experimental Music: Pioneers in Technology and Composition''. New York: Routledge, 2003</ref>{{rp|22|date=Novembro de 2012}} and was renamed Musical Instrument Digital Interface.<ref name="Huber"/>{{rp|4|date=Novembro de 2012}} MIDI's development was announced to the public by [[Robert Moog]] in the October 1982 issue of ''Keyboard'' magazine.<ref name="Manning">Manning, Peter. ''Electronic and Computer Music''. 1985. Oxford: Oxford University Press, 1994. Print.</ref>{{rp|276|date=Novembro de 2012}}

|||

By the Winter NAMM Show in January 1983, Smith was able to demonstrate a MIDI connection between his Prophet 600 analog synthesizer and a Roland JP-6. The MIDI Specification was published in August 1983.<ref name="chadab5100">{{cite journal|last=Chadabe|first=Joel|date=1 de maio de 2000|title=Part IV: The Seeds of the Future|journal=Electronic Musician|publisher=Penton Media|volume=XVI|issue=5|url=http://www.emusician.com/gear/0769/the-electronic-century-part-iv-the-seeds-of-the-future/145415}}</ref> The MIDI standard was unveiled by Ikutaro Kakehashi and Dave Smith, who in 2013 received the [[Grammy Awards|Technical Grammy Award]] for their key role in the development of MIDI.<ref>http://www.grammy.com/news/technical-grammy-award-ikutaro-kakehashi-and-dave-smith</ref><ref>http://www.grammy.com/videos/technical-grammy-award-recipients-ikutaro-kakehashi-and-dave-smith-at-special-merit-awards</ref>

|||

<!-- |

|||

===MIDI's impact on the music industry=== |

|||

MIDI's appeal was originally limited to those who wanted to use electronic instruments in the production of popular music. The standard allowed different instruments to speak with each other and with computers, and this spurred a rapid expansion of the sales and production of electronic instruments and music software.<ref name="Holmes" />{{rp|21|date=November 2012}} This intercompatibility allowed one device to be controlled from another, which rid musicians of the need for excessive hardware.<ref>{{cite journal|last=Paul|first=Craner|title=New Tool for an Ancient Art: The Computer and Music|journal=Computers and the Humanities|date=Oct 1991|volume=25|issue=5|pages=308–309|jstor=30204425|doi=10.1007/bf00120967}}</ref> MIDI's introduction coincided with the dawn of the personal computer era and the introductions of samplers, whose ability to play back prerecorded sounds allowed stage performances to include effects that previously were unobtainable outside the studio, and digital synthesizers, which allowed pre-programmed sounds to be stored and recalled with the press of a button.<ref>Macan, Edward. ''Rocking the Classics: English Progressive Rock and the Counterculture''. New York: Oxford University Press, 1997. p.191</ref> The creative possibilities brought about by MIDI technology have been credited as having helped to revive the music industry in the 1980s.<ref>Shuker, Roy. ''Understanding Popular Music''. London: Routledge, 1994. p.286</ref> |

|||

MIDI introduced many capabilities which transformed the way musicians work. MIDI sequencing makes it possible for a user with no notation skills to build complex arrangements.<ref>Demorest, Steven M. ''Building Choral Excellence: Teaching Sight-Singing in the Choral Rehearsal''. New York: Oxford University Press, 2003. p. 17</ref> A musical act with as few as one or two members, each operating multiple MIDI-enabled devices, can deliver a performance which sounds similar to that of a much larger group of musicians.<ref>Pertout, Andrian. ''[http://www.pertout.com/Midi.htm Mixdown Monthly]'', #26. 26 June 1996. Web. 22 August 2012</ref> The expense of hiring outside musicians for a project can be reduced or eliminated,<ref name="Huber"/>{{rp|7|date=November 2012}} and complex productions can be realized on a system as small as a single MIDI workstation, a synthesizer with integrated keyboard and sequencer. Professional musicians can do this in an environment such as a [[home recording]] space, without the need to rent a professional [[recording studio]] and staff. By performing preproduction in such an environment, an artist can reduce recording costs by arriving at a recording studio with a work that is already partially completed. Rhythm and background parts can be sequenced in advance, and then played back onstage.<ref name="Huber"/>{{rp|7–8|date=November 2012}} Performances require less haulage and set-up/tear-down time, due to the reduced amount and variety of equipment and associated connections necessary to produce a variety of sounds.{{citation needed|date=August 2012}} [[Educational technology]] enabled by MIDI has transformed music education.<ref>Crawford, Renee. ''An Australian Perspective: Technology in Secondary School Music". ''Journal of Historical Research in Music Education''. Vol. 30, No. 2. Apr 2009. Print.</ref> |

|||

==Applications== |

|||

===Instrument control=== |

|||

MIDI was invented so that musical instruments could communicate with each other and so that one instrument can control another. Analog synthesizers that have no digital component and were built prior to MIDI's development can be retrofit with kits that convert MIDI messages into analog control voltages.<ref name="Manning" />{{rp|277|date=November 2012}} When a note is played on a MIDI instrument, it generates a digital signal that can be used to trigger a note on another instrument.<ref name="Huber" />{{rp|20|date=November 2012}} The capability for remote control allows full-sized instruments to be replaced with smaller sound modules, and allows musicians to combine instruments to achieve a fuller sound, or to create combinations such as acoustic piano and strings.<ref name="Why">Lau, Paul. "[http://www.highbeam.com/doc/1P3-1610624011.html Why Still MIDI?]."{{Subscription required|via=[[HighBeam Research]]}} Canadian Musician. Norris-Whitney Communications Inc. 2008. HighBeam Research. 4 September 2012</ref> MIDI also enables other instrument parameters to be controlled remotely. Synthesizers and samplers contain various tools for shaping a sound. [[Filter (signal processing)|Filters]] adjust [[timbre]], and envelopes automate the way a sound evolves over time.<ref>Sasso, Len. "[http://www.emusician.com/news/0766/sound-programming-101/145154 Sound Programming 101]". ''Electronic Musician''. NewBay Media. 1 October 2002. Web. 4 September 2012.</ref> The frequency of a filter and the envelope attack, or the time it takes for a sound to reach its maximum level, are examples of synthesizer [[parameter]]s, and can be controlled remotely through MIDI. Effects devices have different parameters, such as delay feedback or reverb time. When a MIDI continuous controller number is assigned to one of these parameters, the device will respond to any messages it receives that are identified by that number. 
Controls such as knobs, switches, and pedals can be used to send these messages. A set of adjusted parameters can be saved to a device's internal memory as a "patch", and these patches can be remotely selected by MIDI program changes. The MIDI standard allows selection of 128 different programs, but devices can provide more by arranging their patches into banks of 128 programs each, and combining a program change message with a bank select message.<ref>Anderton, Craig. "[http://www.soundonsound.com/sos/1995_articles/may95/midiforguitarists.html MIDI For Guitarists: A Crash Course In MIDI Effects Control]". ''Sound On Sound''. SOS Publications. May 1995.</ref> |
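The combination of a bank select message with a program change can be sketched as below. This assumes the MIDI 1.0 conventions that bank select uses controller numbers 0 (most significant 7 bits) and 32 (least significant 7 bits) on status byte 0xB0, and that program change uses status byte 0xC0; these numbers are not given in this article, and the function name is hypothetical.

```python
CONTROL_CHANGE = 0xB0   # assumed status byte for controller messages
PROGRAM_CHANGE = 0xC0   # assumed status byte for program changes
BANK_SELECT_MSB = 0     # assumed controller number, upper 7 bits of the bank
BANK_SELECT_LSB = 32    # assumed controller number, lower 7 bits of the bank


def select_patch(channel: int, bank: int, program: int) -> bytes:
    """Build the byte sequence that selects one of 128 programs in a given bank."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0..15")
    if not 0 <= bank <= 0x3FFF:
        raise ValueError("bank must fit in 14 bits")
    if not 0 <= program <= 127:
        raise ValueError("program must be in 0..127")
    msb, lsb = bank >> 7, bank & 0x7F
    return bytes([
        CONTROL_CHANGE | channel, BANK_SELECT_MSB, msb,
        CONTROL_CHANGE | channel, BANK_SELECT_LSB, lsb,
        PROGRAM_CHANGE | channel, program,
    ])


if __name__ == "__main__":
    # Program 5 of bank 1 on the first channel.
    print(select_patch(0, 1, 5).hex(" "))
```

How a device maps bank numbers to its stored patches is device-specific, so the bank value here is only a number that the receiving instrument interprets.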

|||

===Composition=== |

|||

{{Listen |

|||

| filename = Drum sample.mid |

|||

| title = Drum sample 1 |

|||

| alt = |

|||

| description = Drum sample 1 |

|||

| filename2 = Drum sample2.mid |

|||

| title2 = Drum sample 2 |

|||

| alt2 = |

|||

| description2 = Drum sample 2 |

|||

| filename3 = Bass sample.mid |

|||

| title3 = Bass sample 1 |

|||

| alt3 = |

|||

| description3 = Bass sample 1 |

|||

| filename4 = Bass sample2.mid |

|||

| title4 = Bass sample 2 |

|||

| alt4 = |

|||

| description4 = Bass sample 2 |

|||

| filename5 = MIDI sample.mid |

|||

| title5 = Combination |

|||

| alt5 = |

|||

| description5 = A combination of the previous four files, with [[piano]], [[jazz guitar]], a [[Hi-hat (instrument)|hi-hat]] and four extra [[Bar (music)|measures]] added to complete the short song, in [[A minor]] |

|||

| filename6 = MIDI playback sample.ogg |

|||

| title6 = Combination on a synthesizer |

|||

| alt6 = |

|||

| description6 = The previous file being played on a MIDI-compatible [[synthesizer]] |

|||

}} |

|||

MIDI events can be sequenced with [[List of MIDI editors and sequencers|computer software]], or in specialized hardware [[music workstation]]s. Many [[digital audio workstation]]s (DAWs) are specifically designed to work with MIDI as an integral component. MIDI [[piano roll]]s have been developed in many DAWs so that the recorded MIDI messages can be extensively modified.<ref>{{cite web|title=Digital audio workstation – Intro |url=http://homerecording.guidento.com/daw.htm |archivedate=10 January 2012 |archiveurl=http://web.archive.org/web/20120110031303/http://homerecording.guidento.com/daw.htm}}</ref>{{better source|date=August 2012}} These tools allow composers to audition and edit their work much more quickly and efficiently than did older solutions, such as [[multitrack recording]]. They improve the efficiency of composers who lack strong pianistic abilities, and allow untrained individuals the opportunity to create polished arrangements.<ref name="Muse">McCutchan, Ann. ''The Muse That Sings: Composers Speak about the Creative Process''. New York: Oxford University Press, 1999. p. 67-68,72</ref>{{rp|67–8,72|date=November 2012}} |

|||

Because MIDI is a set of commands that create sound, MIDI sequences can be manipulated in ways that prerecorded audio cannot. It is possible to change the key, instrumentation or tempo of a MIDI arrangement,<ref name="Brewster" />{{rp|227|date=November 2012}} and to reorder its individual sections.<ref>Campbell, Drew. ""Click, Click. Audio" ''Stage Directions''. Vol. 16, No. 3. Mar 2003.</ref> The ability to compose ideas and quickly hear them played back enables composers to experiment.<ref name="Muse" />{{rp|175|date=November 2012}} [[Algorithmic composition]] programs provide computer-generated performances that can be used as song ideas or accompaniment.<ref name="Huber" />{{rp|122|date=November 2012}} |

|||

Some composers may take advantage of [[MIDI 1.0]] and [[General MIDI|General MIDI (GM)]] technology to allow musical data files to be shared among various electronic instruments by using a standard, portable set of commands and parameters. The data composed via the sequenced MIDI recordings can be saved as a Standard MIDI File (SMF), digitally distributed, and reproduced by any computer or electronic instrument that also adheres to the same MIDI, GM, and SMF standards. MIDI data files are much smaller than recorded [[audio file]]s. |

|||

===MIDI and computers=== |

|||

At the time of MIDI's introduction, the computing industry was mainly devoted to [[mainframe computer]]s, and [[personal computer]]s were not commonly owned. The personal computer market stabilized at the same time that MIDI appeared, and computers became a viable option for music production.<ref name="Manning" />{{rp|324|date=November 2012}} In the years immediately after the 1983 ratification of the MIDI specification, MIDI features were adapted to several early computer platforms, including [[Apple II Plus]], [[Apple IIe|IIe]] and [[Apple Macintosh|Macintosh]], [[Commodore 64]] and [[Commodore Amiga|Amiga]], [[Atari ST]], [[Acorn Archimedes]], and [[PC DOS]].<ref name="Manning" />{{rp|325–7|date=November 2012}} The Macintosh was the favorite among US musicians, as it was marketed at a competitive price, and it would be several years before PC systems caught up with its efficiency and [[WIMP (computing)|graphical interface]].{{Citation needed|date=October 2014}} The Atari ST was favored in Europe, where Macintoshes were more expensive.<ref name="Manning" />{{rp|324–5, 331|date=November 2012}} Apple computers included audio hardware that was more advanced than that of their competitors. 
The [[Apple IIGS]] used a digital sound chip designed for the [[Ensoniq Mirage]] synthesizer, and later models used a custom sound system and upgraded processors, which drove other companies to improve their own offerings.<ref name="Manning" />{{rp|326,328|date=November 2012}} The Atari ST was favored for its MIDI ports that were built directly into the computer.<ref name="Manning" />{{rp|329|date=November 2012}} Most music software in MIDI's first decade was published for either the Apple or the Atari.<ref name="Manning" />{{rp|335|date=November 2012}} By the time of [[Windows 3.0]]'s 1990 release, PCs had caught up in processing power and had acquired a graphical interface,<ref name="Manning" />{{rp|325|date=November 2012}} and software titles began to see release on multiple platforms.<ref name="Manning" />{{rp|335|date=November 2012}} |

|||

====Standard MIDI files==== |

|||

The Standard MIDI File (SMF) is a [[file format]] that provides a standardized way for sequences to be saved, transported, and opened in other systems. The compact size of these files has led to their widespread use in computers, mobile phone [[ringtone]]s, webpage authoring and greeting cards. They are intended for universal use, and include such information as note values, timing and track names. Lyrics may be included as [[metadata]], and can be displayed by karaoke machines.<ref>Hass, Jeffrey. "[http://www.indiana.edu/%7Eemusic/etext/MIDI/chapter3_MIDI10.shtml Chapter Three: How MIDI works 10]". Indiana University Jacobs School of Music. 2010. Web 13 August 2012</ref> The SMF specification was developed and is maintained by the MMA. SMFs are created as an export format of software sequencers or hardware workstations. They organize MIDI messages into one or more parallel [[Multitrack recording|tracks]], and timestamp the events so that they can be played back in sequence. A [[Header (computing)|header]] contains the arrangement's track count, tempo and which of three SMF formats the file is in. A type 0 file contains the entire performance, merged onto a single track, while type 1 files may contain any number of tracks that are performed in synchrony. Type 2 files are rarely used<ref>"[http://www.midi.org/aboutmidi/tut_midifiles.php MIDI Files]". ''midi.org'' Music Manufacturers Association. n.d. Web. 27 August 2012</ref> and store multiple arrangements, with each arrangement having its own track and intended to be played in sequence. |
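Reading the header described above can be sketched as follows. This assumes the layout given in the SMF specification (the ASCII tag "MThd", a 4-byte big-endian length, then three 16-bit big-endian fields: format, track count and timing division); the field layout is not stated in this article, and the function name is hypothetical. The third field holds the timing resolution used to interpret event timestamps.

```python
import struct


def parse_smf_header(data: bytes) -> dict:
    """Parse the first 14 bytes of a Standard MIDI File (assumed MThd layout)."""
    if len(data) < 14:
        raise ValueError("an SMF header needs at least 14 bytes")
    # ">4sIHHH": big-endian tag, 32-bit length, then three 16-bit fields.
    tag, length, smf_format, track_count, division = struct.unpack(">4sIHHH", data[:14])
    if tag != b"MThd":
        raise ValueError("not a Standard MIDI File header")
    if smf_format not in (0, 1, 2):
        raise ValueError("unknown SMF format type")
    return {"format": smf_format, "tracks": track_count, "division": division}


if __name__ == "__main__":
    # A hand-built type 1 header with two tracks, for demonstration only.
    sample = b"MThd" + struct.pack(">IHHH", 6, 1, 2, 480)
    print(parse_smf_header(sample))
```

To inspect a real file, the same function could be given the first 14 bytes read from it in binary mode.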

|||

[[Microsoft Windows]] bundles SMFs together with [[Downloadable Sounds]] (DLS) in a [[Resource Interchange File Format]] (RIFF) wrapper, as RMID files with a <code>.rmi</code> extension. RIFF-RMID has been [[Deprecation|deprecated]] in favor of Extensible Music Files ([[XMF]]).<ref>"[http://www.digitalpreservation.gov/formats/fdd/fdd000120.shtml RIFF-based MIDI File Format]". ''digitalpreservation.gov''. Library of Congress. 26 March 2012. Web. 18 August 2012</ref> |

|||

====File sharing==== |

|||

A MIDI file is not a recording of actual music. Rather, it is a spreadsheet-like set of instructions, and can use a thousand times less disk space than the equivalent recorded audio.<ref name="Crawford">Crawford, Walt. "MIDI and Wave: Coping with the Language". ''Online''. Vol. 20, No. 1. Jan/Feb 1996</ref> This made MIDI file arrangements an attractive way to share music, before the advent of [[broadband internet access]] and multi-gigabyte hard drives. Licensed MIDI files on [[floppy disk]]s were commonly available in stores in Europe and Japan during the 1990s.<ref>"MIDI Assoc. pushes for new licensing agreement. (MIDI Manufacturers Association)." Music Trades. Music Trades Corp. 1996. HighBeam Research. 4 September 2012 {{Subscription required|via=[[HighBeam Research]]}}</ref> The major drawback to this is the wide variation in quality of users' audio cards, and in the actual audio contained as samples or synthesized sound in the card that the MIDI data only refers to symbolically. Even a sound card that contains high-quality sampled sounds can have inconsistent quality from one instrument to another,<ref name="Crawford" /> while different model cards have no guarantee of consistent sound of the same instrument. Early budget cards, such as the [[AdLib]] and the [[Sound Blaster]] and its compatibles, used a stripped-down version of Yamaha's [[frequency modulation synthesis]] (FM synthesis) technology<ref name="WiffenFM">Wiffen, Paul. "[http://www.soundonsound.com/sos/1997_articles/sep97/synthschool3.html Synth School, Part 3: Digital Synthesis (FM, PD & VPM)]". ''Sound on Sound'' Sep 1997. Print.</ref> played back through low-quality digital-to-analog converters. The low-fidelity reproduction<ref name="Crawford" /> of these ubiquitous<ref name="WiffenFM" /> cards was often assumed to somehow be a property of MIDI itself. 
This created a perception of MIDI as low-quality audio, while in reality MIDI itself contains no sound,<ref name="Battino" /> and the quality of its playback depends entirely on the quality of the sound-producing device (and of samples in the device).<ref name="Brewster" />{{rp|227|date=November 2012}} |

|||

--> |

|||

==MIDI software==

|||

The main advantage of the personal computer in a MIDI system is that it can serve several different purposes, depending on the software that is loaded.<ref name="Huber" />{{rp|55|date=Novembro de 2012}} [[Multitarefa|Multitasking computers]] allow simultaneous operation of programs that may be able to share data with each other.<ref name="Huber" />{{rp|65|date=Novembro de 2012}}

|||

== Software ==

|||

Some [[software]] that works with MIDI:

* [[Ableton Live]]
* Anvil Studio
* [[Band-in-a-Box]]
* [http://midi.brels.com BRELS MIDI Editor (GNU/GPL)]
* [[Cakewalk Sonar]]
* [[FL Studio]]
* [[Logic Pro]]
* Max/MSP/Jitter
* [[NEMIDi]]
* [[Nero]]
* [[Nero 7]]
* NIAONiao
* [[Steinberg Cubase]]
* [[Steinberg Nuendo]]
* [[Pro Tools]]
* [[Guitar Pro]]
* [[Pure Data]]
* [[Reason]]
* Reaper
* [[Sony ACID Pro]]
* [[Rosegarden]]
* [[Sonar X1]]
* [[Vocaloid]]
* [[UTAU]]

<!-- |

|||

=====Sequencers===== |

|||

{{main|Digital audio workstation}} |

|||

Sequencing software provides a number of benefits to a composer or arranger. It allows recorded MIDI to be manipulated using standard computer editing features such as [[cut, copy and paste]] and [[drag and drop]]. [[Keyboard shortcut]]s can be used to streamline workflow, and editing functions are often selectable via MIDI commands. The sequencer allows each channel to be set to play a different sound, and gives a graphical overview of the arrangement. A variety of editing tools are made available, including a notation display that can be used to create printed parts for musicians. Tools such as [[Loop (music)|looping]], [[quantization (music)|quantization]], randomization, and [[transposition (music)|transposition]] simplify the arranging process. [[Beats (music)|Beat]] creation is simplified, and [[groove (music)|groove]] templates can be used to duplicate another track's rhythmic feel. Realistic expression can be added through the manipulation of real-time controllers. Mixing can be performed, and MIDI can be synchronized with recorded audio and video tracks. Work can be saved, and transported between different computers or studios.<ref>Gellerman, Elizabeth. "Audio Editing SW Is Music to Multimedia Developers' Ears". ''Technical Horizons in Education Journal''. Vol. 22, No. 2. Sep 1994</ref><ref name="Desmond">Desmond, Peter. "ICT in the Secondary Music Curriculum". ''Aspects of Teaching Secondary Music: Perspectives on Practice''. ed. Gary Spruce. New York: RoutledgeFalmer, 2002</ref>{{rp|164–6|date=November 2012}} |

|||

Sequencers may take alternate forms, such as drum pattern editors that allow users to create beats by clicking on pattern grids,<ref name="Huber" />{{rp|118|date=November 2012}} and loop sequencers such as [[ACID Pro]], which allow MIDI to be combined with prerecorded audio loops whose tempos and keys are matched to each other. Cue list sequencing is used to trigger dialogue, sound effect, and music cues in stage and broadcast production.<ref name="Huber" />{{rp|121|date=November 2012}} |

|||

=={{Ligações externas}}== |

|||

[http://www.academiamusical.com.pt/mostrar.php?idd=81 O que é o MIDI] |

|||

=====Notation/scoring software===== |

|||

[http://www.outrosventos.com.br/portal/tecnologia/computacao-musical/midi-saiba-o-que-e-e-como-funciona.html MIDI, saiba o que é e como funciona] |

|||

{{main|Scorewriter}} |

|||

With MIDI, notes played on a keyboard can automatically be transcribed to [[sheet music]].<ref name="Holmes">Holmes, Thom. ''Electronic and Experimental Music: Pioneers in Technology and Composition''. New York: Routledge, 2003</ref>{{rp|213|date=November 2012}} [[Scorewriter|Scorewriting]], or notation software typically lacks advanced sequencing tools, and is optimized for the creation of a neat, professional printout designed for live instrumentalists.<ref name="Desmond" />{{rp|157|date=November 2012}} These programs provide support for dynamics and expression markings, chord and lyric display, and complex score styles.<ref name="Desmond" />{{rp|167|date=November 2012}} Software is available that can print scores in [[braille]].<ref>Solomon, Karen. "[http://www.wired.com/culture/lifestyle/news/2000/02/34495 You Gotta Feel the Music]". ''wired.com''. Condé Nast. 27 February 2000. Web. 13 August 2012.</ref> |

|||

DoReMIR Music Research's [[Scorecloud|ScoreCloud]] is widely recognised as the best tool for real time transcription from MIDI to scores.<ref>Inglis, Sam. "[http://www.soundonsound.com/sos/jan15/articles/scorecloud.htm ScoreCloud Studio]". ''Sound On Sound''. SOS Publications. Jan 2015. Print.</ref> |

|||

[http://br.nemidi.com Editor de Midi Online] |

|||

Musitek's [[SmartScore]] (formerly MIDIScan) software performs the reverse process, and can produce MIDI files from [[Image scanner|scanned]] sheet music.<ref>Cook, Janet Harniman. "[http://www.soundonsound.com/sos/dec98/articles/midiscan.265.htm Musitek Midiscan v2.51]". ''Sound On Sound''. SOS Publications. Dec 1998. Print.</ref> |

|||

[http://shdo.com.br/softwares/e2mc E2MC Piano Virtual - Ambiente para controle MIDI (Versão Alfa)] |

|||

Prominent notation programs include [[Finale (software)|Finale]], published by MakeMusic, and [[Encore (software)|Encore]], originally published by [[Passport Designs Inc.]], but now by [[GVOX]]. [[Sibelius (software)|Sibelius]], originally created for [[RISC]]-based [[Acorn computers]], was so well-regarded that, before Windows and Macintosh versions were available, composers would purchase Acorns for the sole purpose of using Sibelius.<ref>"[http://www.computinghistory.org.uk/articles/25.htm The Social Impact of Computers- One Man's Story]". ''computinghistory.org.uk''. n.p. n.d. 1 August 2012</ref> |

|||

[http://shdo.com.br/softwares/archives/479 Apresentação Introdução ao MIDI, 66 slides.] |

|||

=====Editor/librarians===== |

|||

== {{Ver também}} == |

|||

Patch editors allow users to program their equipment through the computer interface. These became essential with the appearance of complex synthesizers such as the [[Yamaha FS1R]],<ref>Johnson, Derek. "[http://www.soundonsound.com/sos/mar99/articles/yamahafs1r.htm Yamaha FS1R Editor Software]". ''Sound on Sound''. Mar 1999.</ref> which contained several thousand programmable parameters, but had an interface that consisted of fifteen tiny buttons, four knobs and a small LCD.<ref>Johnson, Derek, and Debbie Poyser. "[http://www.soundonsound.com/sos/dec98/articles/yamfs1r.549.htm Yamaha FS1R]". ''Sound on Sound''. Dec 1998.</ref> Digital instruments typically discourage users from experimentation, due to their lack of the feedback and direct control that switches and knobs would provide,<ref name="Gibbs" />{{rp|393|date=November 2012}} but patch editors give owners of hardware instruments and effects devices the same editing functionality that is available to users of software synthesizers.<ref>"[http://www.squest.com/Products/MidiQuest11/index.html Sound Quest MIDI Quest 11 Universal Editor]". ''squest.com''. n.p. n.d. Web. 21 August 2012</ref> Some editors are designed for a specific instrument or effects device, while other, "universal" editors support a variety of equipment, and ideally can control the parameters of every device in a setup through the use of System Exclusive commands.<ref name="Huber"/>{{rp|129|date=November 2012}} |

|||

Patch librarians have the specialized function of organizing the sounds in a collection of equipment, and allow transmission of entire banks of sounds between an instrument and a computer. This allows the user to augment the device's limited patch storage with a computer's much greater disk capacity,<ref name="Huber"/>{{rp|133|date=November 2012}} and to share custom patches with other owners of the same instrument.<ref name="Cakewalk">"[http://www.cakewalk.com/support/kb/reader.aspx/2007013074 Desktop Music Handbook – MIDI]". ''cakewalk.com''. Cakewalk, Inc. 26 November 2010. Web. Retrieved 7 August 2012.</ref> Universal editor/librarians that combine the two functions were once common, and included Opcode Systems' Galaxy and [[Emagic|eMagic]]'s SoundDiver. These programs have been largely abandoned with the trend toward computer-based synthesis, although [[Mark of the Unicorn]]'s (MOTU) Unisyn and Sound Quest's Midi Quest remain available. [[Native Instruments]]' Kore was an effort to bring the editor/librarian concept into the age of software instruments.<ref>{{cite web|first=Simon |last=Price |url=http://www.soundonsound.com/sos/jul06/articles/nikore.htm |title=Price, Simon. "Native Instruments Kore". ''Sound on Sound'' Jul 06 |publisher=Soundonsound.com |date= |accessdate=2012-11-27}}</ref> |
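Patch data of the kind described above is commonly carried in System Exclusive messages. The following is a minimal sketch of how a librarian might frame and unframe such a message; it is not the format of any particular instrument. The function names are hypothetical, and the manufacturer ID <code>0x7D</code> is used here on the assumption that it is the ID set aside for non-commercial use.

```python
SYSEX_START = 0xF0        # status byte that opens a System Exclusive message
SYSEX_END = 0xF7          # status byte that closes it
NON_COMMERCIAL_ID = 0x7D  # assumed placeholder ID; real devices use their maker's ID


def frame_sysex(payload: bytes, manufacturer_id: int = NON_COMMERCIAL_ID) -> bytes:
    """Wrap 7-bit patch data in a System Exclusive frame."""
    if any(b > 0x7F for b in payload):
        raise ValueError("SysEx data bytes must be 7-bit values (0..127)")
    return bytes([SYSEX_START, manufacturer_id]) + payload + bytes([SYSEX_END])


def unframe_sysex(message: bytes) -> bytes:
    """Return the data bytes of a complete System Exclusive message."""
    if len(message) < 3 or message[0] != SYSEX_START or message[-1] != SYSEX_END:
        raise ValueError("not a complete SysEx message")
    return message[2:-1]


# Hypothetical four-byte patch, sent to the instrument and read back.
patch = bytes([0x01, 0x7F, 0x40, 0x00])
assert unframe_sysex(frame_sysex(patch)) == patch
```

Data bytes are limited to seven bits because the top bit marks a status byte; a real librarian would also have to follow the device's own packing and checksum rules, which this sketch leaves out.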

|||

=====Auto-accompaniment programs===== |

|||

Programs that can dynamically generate accompaniment tracks are called "auto-accompaniment" programs. These create a full band arrangement in a style that the user selects, and send the result to a MIDI sound generating device for playback. The generated tracks can be used as educational or practice tools, as accompaniment for live performances, or as a songwriting aid. Examples include [[Band-in-a-Box]],<ref name="Bozeman" />{{rp|42|date=November 2012}} which originated on the Atari platform in the 1980s, One Man Band,<ref>"[http://www.1manband.nl/omb/index.htm One Man Band v11 - Live-on-stage arranger]"</ref> Busker,<ref>"[http://www.1manband.nl/busker/index.htm Busker - Score editor and player]"</ref> MiBAC Jazz, SoundTrek JAMMER<ref>"[http://www.soundtrek.com/content/modules.php?name=Content&pa=showpage&pid=25 Jammer Professional 6]". ''soundtrek.com''. SoundTrek. 11 August 2008. Web. Retrieved 7 August 2012.</ref> and DigiBand.<ref>"[http://www.athtek.com/digiband.html AthTek DigiBand 1.4]". ''AthTek.com''. AthTek. 01 May 2013.</ref> |

|||

=====Synthesis and sampling===== |

|||

{{main|Software synthesizer|Software sampler}} |

|||

Computers can use software to generate sounds, which are then passed through a [[digital-to-analog converter]] (DAC) to a loudspeaker system.<ref name="Holmes" />{{rp|213|date=November 2012}} Polyphony, the number of sounds that can be played simultaneously, is dependent on the power of the computer's [[Central Processing Unit]], as are the [[sample rate]] and [[Audio bit depth|bit depth]] of playback, which directly affect the quality of the sound.<ref>Lehrman, Paul D. "[http://www.soundonsound.com/sos/1995_articles/oct95/softwaresynthesis.html Software Synthesis: The Wave Of The Future?]" ''Sound On Sound''. SOS Publications. Oct 1995. Print.</ref> Synthesizers implemented in software are subject to [[Jitter|timing issues]] that are not present with hardware instruments, whose dedicated operating systems are not subject to interruption from background tasks as desktop [[operating systems]] are. These timing issues can cause distortion as recorded tracks lose synchronization with each other, and clicks and pops when sample playback is interrupted. Software synthesizers also exhibit a noticeable [[latency (audio)|delay]] in their sound generation, because computers use an [[Data buffer|audio buffer]] that delays playback and disrupts MIDI timing.<ref name="WalkerTime">Walker, Martin. "[http://www.soundonsound.com/sos/mar01/articles/pcmusician.asp Identifying & Solving PC MIDI & Audio Timing Problems]". ''Sound On Sound''. SOS Publications. Mar 2001. Print.</ref> |
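The delay introduced by an audio buffer can be estimated from the buffer length and the sample rate. The sketch below is illustrative only: the function name and the example buffer sizes are assumptions, and real systems add further delay in drivers and converters.

```python
def buffer_latency_ms(buffer_frames: int, sample_rate_hz: int) -> float:
    """Return the time, in milliseconds, needed to play out one full audio buffer."""
    if buffer_frames <= 0 or sample_rate_hz <= 0:
        raise ValueError("buffer size and sample rate must be positive")
    return 1000.0 * buffer_frames / sample_rate_hz


# Larger buffers are safer against interruptions but delay the sound longer.
for frames in (64, 256, 1024):
    print(frames, "frames:", round(buffer_latency_ms(frames, 44_100), 2), "ms")
```

This shows the trade-off behind the timing issues described above: a small buffer keeps the delay low but is more easily interrupted by background tasks.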

|||

Software synthesis' roots go back as far as the 1950s, when [[Max Mathews]] of [[Bell Labs]] wrote the [[MUSIC-N]] programming language, which was capable of non-real-time sound generation.<ref name="DMM1997">Miller, Dennis. "[http://www.soundonsound.com/sos/1997_articles/may97/softwaresynth2.html Sound Synthesis On A Computer, Part 2]". ''Sound On Sound''. SOS Publications. May 1997. Print.</ref> The first synthesizer to run directly on a host computer's CPU<ref>"[http://www.keyboardmag.com/article/Midi-Ancestors-and-Milestones/2171 MIDI Ancestors and Milestones]". ''keyboardmag.com''. New Bay Media. n.d. Web. 6 August 2012.</ref> was Reality, by Dave Smith's [[Seer Systems]], which achieved a low latency through tight driver integration, and therefore could run only on [[Creative Labs]] soundcards.<ref>Walker, Martin. "[http://www.soundonsound.com/sos/1997_articles/nov97/seerreality.html Reality PC]". ''Sound On Sound''. SOS Publications. Nov 1997. Print.</ref> Some systems use dedicated hardware to reduce the load on the host CPU, as with [[Symbolic Sound Corporation]]'s Kyma System,<ref name="DMM1997" /> and the [[Creamware (software company)|Creamware]]/[[Sonic Core]] Pulsar/SCOPE systems,<ref>Wherry, Mark. "[http://www.soundonsound.com/sos/jun03/articles/creamwarescope.asp Creamware SCOPE]". ''Sound On Sound''. SOS Publications. Jun 2003. Print.</ref> which used several DSP chips hosted on a [[Conventional PCI|PCI card]] to power an entire studio's worth of instruments, effects, and mixers.<ref>Anderton, Craig. "[http://www.keyboardmag.com/article/sonic-core-scope-xite-1/147874 Sonic Core SCOPE Xite-1]". ''keyboardmag.com''. New Bay Media, LLC. n.d. Web.</ref> |

|||

The ability to construct full MIDI arrangements entirely in computer software allows a composer to render a finalized result directly as an audio file.<ref name="Why" /> |

|||

====Game music==== |

|||

Early PC games were distributed on floppy disks, and the small size of MIDI files made them a viable means of providing soundtracks. Games of the [[DOS]] and early Windows eras typically required compatibility with either Ad Lib or SoundBlaster audio cards. These cards used FM synthesis, which generates sound through [[modulation]] of [[sine wave]]s. [[John Chowning]], the technique's pioneer, theorized that the technology would be capable of accurate recreation of any sound if enough sine waves were used, but budget computer audio cards performed FM synthesis with only two sine waves. Combined with the cards' 8-bit audio, this resulted in a sound described as "artificial"<ref>David Nicholson. "[http://www.highbeam.com/doc/1P2-946733.html HARDWARE]."{{Subscription required|via=[[HighBeam Research]]}} The Washington Post. Washingtonpost Newsweek Interactive. 1993. HighBeam Research. 4 September 2012</ref> and "primitive".<ref name="Levy">Levy, David S. "[http://www.highbeam.com/doc/1G1-14803399.html Aztech's WavePower daughtercard improves FM reception. (Aztech Labs Inc.'s wavetable synthesis add-on card for Sound Blaster 16 or Sound Galaxy Pro 16 sound cards) (Hardware Review) (Evaluation).]" Computer Shopper. SX2 Media Labs LLC. 1994. HighBeam Research. 4 September 2012{{Subscription required|via=[[HighBeam Research]]}}</ref> Wavetable [[daughterboard]]s that were later available provided audio samples that could be used in place of the FM sound. These were expensive, but often used the sounds from respected MIDI instruments such as the [[E-mu Proteus]].<ref name="Levy" /> The computer industry moved in the mid-1990s toward wavetable-based soundcards with 16-bit playback, but standardized on a 2MB ROM, a space too small in which to fit good-quality samples of 128 instruments plus drum kits. Some manufacturers used 12-bit samples, and padded those to 16 bits.<ref>Labriola, Don. 
"[http://www.highbeam.com/doc/1G1-16232686.html MIDI masters: wavetable synthesis brings sonic realism to inexpensive sound cards. (review of eight Musical Instrument Digital Interface sound cards) (includes related articles about testing methodology, pitfalls of wavetable technology, future wavetable developments) (Hardware Review) (Evaluation).]"{{Subscription required|via=[[HighBeam Research]]}} Computer Shopper. SX2 Media Labs LLC. 1994. HighBeam Research. 4 September 2012</ref> |

|||
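The two-sine-wave FM synthesis described above can be sketched in a few lines. This is an illustration of the general technique, not of any sound card's actual circuitry; the function names, the sample rate and the 8-bit quantization scheme are assumptions chosen for the example.

```python
import math


def fm_two_operator(carrier_hz, modulator_hz, index, sample_rate=8000, n_samples=8000):
    """Generate a sine carrier whose phase is modulated by one sine modulator."""
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        samples.append(math.sin(2 * math.pi * carrier_hz * t + index * modulator))
    return samples


def to_unsigned_8bit(samples):
    """Quantize samples in [-1.0, 1.0] to 8-bit values (0..255)."""
    return [min(255, max(0, int(round((s + 1.0) * 127.5)))) for s in samples]


# A 440 Hz carrier modulated at 220 Hz; a larger index gives a brighter tone.
tone = to_unsigned_8bit(fm_two_operator(440.0, 220.0, index=2.0))
```

With <code>index</code> set to zero the modulator has no effect and the output is a plain sine wave; with only one modulator, the range of timbres is limited, which matches the criticism quoted above.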

===Other applications=== |

|||

MIDI has been adopted as a control protocol in a number of non-musical applications. [[MIDI Show Control]] uses MIDI commands to direct stage lighting systems and to trigger cued events in theatrical productions. [[VJ (video performance artist)|VJ]]s and [[Turntablism|turntablists]] use it to cue clips and to synchronize equipment, and recording systems use it for synchronization and [[Console automation|automation]]. [[Apple Motion]] allows control of animation parameters through MIDI. The 1987 [[first-person shooter]] game ''[[MIDI Maze]]'' and the 1990 [[Atari ST]] [[computer puzzle game]] ''[[Oxyd]]'' used MIDI to network computers together, and kits are available that allow MIDI control over home lighting and appliances.<ref>"[http://midikits.net23.net/midi_10_out/interface_circuits.htm Interface Circuits]". MIDI Kits. n.p. 30 August 2012. Web. 30 August 2012.</ref> |

|||

Despite its association with music devices, MIDI can control any device that can read and process a MIDI command. In principle, MIDI commands could therefore be used to direct a spacecraft, to control home lighting, heating and air conditioning, or to sequence traffic light signals. The receiving device would require a processor capable of interpreting MIDI messages; in this case, program changes would trigger a function on that device rather than notes from a MIDI instrument. Each function can be tied to a timer (also controlled by MIDI) or to another condition determined by the device's creator. |
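The idea that a program change can trigger a device function rather than select a sound can be sketched as a lookup table. The names <code>make_dispatcher</code> and <code>actions</code>, and the lighting functions, are hypothetical and serve only to illustrate the principle.

```python
PROGRAM_CHANGE = 0xC0  # upper four bits of a Program Change status byte


def make_dispatcher(actions):
    """Return a handler that runs the action mapped to a received program number."""
    def handle(message: bytes):
        # A Program Change message is two bytes: status, then program number.
        if len(message) != 2 or (message[0] & 0xF0) != PROGRAM_CHANGE:
            return None  # not a Program Change; ignore it
        action = actions.get(message[1] & 0x7F)
        return action() if action else None
    return handle


# Hypothetical device functions keyed by program number.
actions = {0: lambda: "lights on", 1: lambda: "lights off"}
handle = make_dispatcher(actions)
print(handle(bytes([0xC0, 0])))
```

The lower four bits of the status byte carry the channel, so this handler responds on any channel; a real device would usually listen on one configured channel only.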

|||

==MIDI devices== |

|||

===Connectors=== |

|||

[[File:Midi ports and cable.jpg|thumb|alt=MIDI connectors and a MIDI cable|MIDI connectors and a MIDI cable.]] |

|||

The cables terminate in a [[DIN connector|180° five-pin DIN connector]]. Standard applications use only three of the five conductors: a [[ground (electricity)|ground]] wire, and a [[Balanced line|balanced pair]] of conductors that carry a +5 volt signal.<ref name="Bozeman">Bozeman, William C. ''Educational Technology: Best Practices from America's Schools''. Larchmont: Eye on Education, 1999.</ref> {{rp|41|date=November 2012}} This connector configuration can only carry messages in one direction, so a second cable is necessary for two-way communication.<ref name="Huber">Huber, David Miles. "The MIDI Manual". Carmel, Indiana: SAMS, 1991.</ref>{{rp|13|date=November 2012}} Some proprietary applications, such as [[Phantom power|phantom-powered]] footswitch controllers, use the spare pins for [[direct current]] (DC) power transmission.<ref>Lockwood, Dave. "[http://www.soundonsound.com/sos/dec01/articles/tcgmajor.asp TC Electronic G Major]". ''Sound On Sound''. SOS Publications. Dec 2001. Print.</ref> |

|||

[[Opto-isolator]]s keep MIDI devices electrically separated from their connectors, which prevents the occurrence of [[Ground loop (electricity)|ground loops]]<ref>Mornington-West, Allen. "Digital Theory". ''Sound Recording Practice''. 4th Ed. Ed. John Borwick. Oxford: Oxford University Press, 1996.</ref>{{rp|63|date=November 2012}} and protects equipment from voltage spikes.<ref name="Manning" />{{rp|277|date=November 2012}} There is no [[Error detection and correction|error detection]] capability in MIDI, so the maximum cable length is set at 15 meters (50 feet) in order to limit [[interference (communication)|interference]].<ref>"[http://www.richmondsounddesign.com/faq.html#midilen Richmond Sound Design – Frequently Asked Questions]". ''richmondsounddesign.com''. Web. 5 August 2012.</ref> |

|||

[[File:MIDI connector2.svg|thumb|right|upright=0.50|alt=Diagram of a MIDI connector|A MIDI connector, showing the pins as numbered.]] |

|||

Most devices do not copy messages from their input to their output port. A third type of port, the "thru" port, emits a copy of everything received at the input port, allowing data to be forwarded to another instrument<ref name="Manning" />{{rp|278|date=November 2012}} in a [[Daisy chain (electrical engineering)|"daisy chain"]] arrangement.<ref name="indiana.edu">Hass, Jeffrey. "[http://www.indiana.edu/%7Eemusic/etext/MIDI/chapter3_MIDI2.shtml Chapter Three: How MIDI works 2]". Indiana University Jacobs School of Music. 2010. Web. 13 August 2012.</ref> Not all devices contain thru ports, and devices that lack the ability to generate MIDI data, such as effects units and sound modules, may not include out ports.<ref name="Gibbs">Gibbs, Jonathan (Rev. by Peter Howell) "Electronic Music". ''Sound Recording Practice'', 4th Ed. Ed. John Borwick. Oxford: Oxford University Press, 1996</ref>{{rp|384|date=November 2012}} |

|||

====Management devices==== |

|||

Each device in a daisy chain adds delay to the system. This is avoided with a MIDI thru box, which contains several outputs that provide an exact copy of the box's input signal. A MIDI merger is able to combine the input from multiple devices into a single stream, and allows multiple controllers to be connected to a single device. A MIDI switcher allows switching between multiple devices, and eliminates the need to physically repatch cables. MIDI [[patch bay]]s combine all of these functions. They contain multiple inputs and outputs, and allow any combination of input channels to be routed to any combination of output channels. Routing setups can be created using computer software, stored in memory, and selected by MIDI program change commands.<ref name="Huber" />{{rp|47–50|date=November 2012}} This enables the devices to function as standalone MIDI routers in situations where no computer is present.<ref name="Huber"/>{{rp|62–3|date=November 2012}} MIDI patch bays also clean up any skewing of MIDI data bits that occurs at the input stage. |
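The routing behaviour of a patch bay, with stored setups recalled by program number, can be modelled as follows. This is a simplified sketch under assumed names (<code>PatchBay</code>, <code>store_preset</code>, <code>select_preset</code>); it ignores channel-level routing and timing correction.

```python
class PatchBay:
    """Toy model of a MIDI patch bay with presets selected by program number."""

    def __init__(self):
        self.presets = {}  # program number -> {input port: set of output ports}
        self.routes = {}   # routing that is currently active

    def store_preset(self, program, routes):
        """Save a routing setup under a program number."""
        self.presets[program] = {src: set(dsts) for src, dsts in routes.items()}

    def select_preset(self, program):
        """Activate a stored setup, as a program change command would."""
        self.routes = self.presets[program]

    def route(self, input_port, message):
        """Return (output port, message) pairs for a message arriving at input_port."""
        return [(out, message) for out in sorted(self.routes.get(input_port, ()))]


bay = PatchBay()
bay.store_preset(0, {"in1": ["out1", "out2"]})            # behaves like a thru box
bay.store_preset(1, {"in1": ["out3"], "in2": ["out3"]})   # behaves like a merger
bay.select_preset(1)
print(bay.route("in2", bytes([0x90, 60, 100])))
```

Preset 0 copies one input to several outputs and preset 1 combines two inputs into one output, which is how a single patch bay can stand in for a thru box, a merger and a switcher.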

|||

MIDI data processors are used for utility tasks and special effects. These include MIDI filters, which remove unwanted MIDI data from the stream, and MIDI delays, effects which send a repeated copy of the input data at a set time.<ref name="Huber" />{{rp|51|date=November 2012}} |
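Both kinds of processor can be sketched as functions over a list of timestamped messages. The event representation used here, a pair of a time in milliseconds and the raw message bytes, is an assumption made for the example, as are the function names.

```python
def midi_filter(events, blocked_types):
    """Drop channel messages whose status type (upper four bits) is blocked."""
    return [(t, msg) for t, msg in events if (msg[0] & 0xF0) not in blocked_types]


def midi_delay(events, delay_ms, repeats=1):
    """Add delayed copies of every event, as a MIDI echo effect would."""
    out = list(events)
    for k in range(1, repeats + 1):
        out.extend((t + k * delay_ms, msg) for t, msg in events)
    return sorted(out, key=lambda event: event[0])


events = [
    (0, bytes([0x90, 60, 100])),   # note on
    (5, bytes([0xD0, 40])),        # channel pressure (aftertouch)
    (10, bytes([0x80, 60, 0])),    # note off
]
without_pressure = midi_filter(events, {0xD0})
echoed = midi_delay(without_pressure, delay_ms=250)
```

Filtering out dense controller data such as aftertouch before it reaches other devices is a common use, since it reduces the amount of data on the cable.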

|||

====Interfaces==== |

|||

A computer MIDI interface's main function is to match clock speeds between the MIDI device and the computer.<ref name="indiana.edu"/> Some computer sound cards include a standard MIDI connector, whereas others connect by any of various means that include the [[D-subminiature]] DA-15 [[game port]], [[USB]], [[FireWire]], [[Ethernet]] or a proprietary connection. |

|||

The increasing use of [[USB]] connectors in the 2000s has led to the availability of MIDI-to-USB data interfaces that can transfer MIDI channels to USB-equipped computers. Some MIDI keyboard controllers are equipped with USB jacks, and can be plugged into computers that run music software. |

|||

MIDI's serial transmission leads to timing problems. Experienced musicians can detect time differences as small as 1/3 of a millisecond (ms){{citation needed|date=January 2013}} (which is how long it takes sound to travel 4 inches), and a three-byte MIDI message requires nearly 1 ms for transmission.<ref>Robinson, Herbie. "[http://lists.apple.com/archives/coreaudio-api/2005/Jul/msg00120.html Re: core midi time stamping]". ''Apple Coreaudio-api Mailing List''. Apple, Inc. 18 July 2005. 8 August 2012.</ref> Because MIDI is serial, it can only send one event at a time. If an event is sent on two channels at once, the event on the higher-numbered channel cannot transmit until the first one is finished, and so is delayed by about 1 ms. If an event is sent on all channels at the same time, the highest-numbered channel's transmission will be delayed by as much as 16 ms. This contributed to the rise of MIDI interfaces with multiple in- and out-ports, because timing improves when events are spread between multiple ports as opposed to multiple channels on the same port.<ref name="WalkerTime" /> The term "MIDI slop" refers to audible timing errors that result when MIDI transmission is delayed.<ref>Shirak, Rob. "[http://www.emusician.com/news/0766/mark-of-the-unicorn/140335 Mark of the Unicorn]". ''emusician.com''. New Bay Media. 1 October 2000. Web. Retrieved 8 August 2012.</ref> |
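These figures follow from the transmission rate of 31,250 bits per second given earlier in the article. The sketch below assumes the usual serial framing of ten bits per byte (one start bit, eight data bits and one stop bit); the function names are illustrative.

```python
BAUD_RATE = 31_250    # bits per second on a MIDI cable
BITS_PER_BYTE = 10    # assumed framing: 1 start bit + 8 data bits + 1 stop bit


def transmit_time_ms(n_bytes: int) -> float:
    """Time needed to send n_bytes over a single MIDI cable."""
    return 1000.0 * n_bytes * BITS_PER_BYTE / BAUD_RATE


def queue_delay_ms(position: int, message_bytes: int = 3) -> float:
    """Wait before the message at zero-based queue position can start sending."""
    return position * transmit_time_ms(message_bytes)


print(transmit_time_ms(3))   # one three-byte message
print(queue_delay_ms(15))    # sixteenth message of a sixteen-channel chord
```

Under these assumptions a three-byte message takes 0.96 ms, and the last of sixteen simultaneous messages starts about 14.4 ms late and finishes after about 15.4 ms, consistent with the rounded figures above.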

|||

===Controllers=== |

|||

{{main|MIDI controller}} |

|||

There are two types of MIDI controllers: performance controllers that generate notes and are used to perform music,<ref>"[http://www.rolandmusiced.com/spotlight/article.php?ArticleId=1040 MIDI Performance Instruments]". ''Instruments of Change''. Vol. 3, No. 1 (Winter 1999). Roland Corporation, U.S.</ref> and controllers which may not send notes, but transmit other types of real-time events. Many devices are some combination of the two types. |

|||

====Performance controllers==== |

|||

[[File:Remote 25.jpg|thumb|alt=A Novation Remote 25 two-octave MIDI controller|Two-octave MIDI controllers are popular for use with laptop computers, due to their portability. This unit provides a variety of real-time controllers, which can manipulate various sound design parameters of computer-based or standalone hardware instruments, effects, mixers and recording devices.]] |

|||

MIDI was designed with keyboards in mind, and any controller that is not a keyboard is considered an "alternative" controller.<ref>"[http://www.midi.org/aboutmidi/products.php MIDI Products]". ''midi.org''. MIDI Manufacturers Association. n.d. 1 August 2012</ref> This was seen as a limitation by composers who were not interested in keyboard-based music, but the standard proved flexible, and MIDI compatibility was introduced to other types of controllers, including guitars, wind instruments and drum machines.<ref name="Holmes" />{{rp|23|date=November 2012}} |

|||

=====Keyboards===== |

|||

{{main|MIDI keyboard}} |

|||

[[Musical keyboard|Keyboard]]s are by far the most common type of MIDI controller.<ref name="Cakewalk" /> These are available in sizes that range from 25-key, 2-octave models, to full-sized 88-key instruments. Some are keyboard-only controllers, though many include other real-time controllers such as sliders, knobs, and wheels.<ref>{{cite web|title=The beginner's guide to: MIDI controllers|url=http://www.musicradar.com/tuition/tech/the-beginners-guide-to-midi-controllers-179018|publisher=Computer Music Specials|accessdate=11 July 2011}}</ref> Commonly, there are also connections for [[Sustain pedal|sustain]] and [[expression pedal]]s. Most keyboard controllers offer the ability to split the playing area into "zones", which can be of any desired size and can overlap with each other. Each zone can respond to a different MIDI channel and a different set of performance controllers, and can be set to play any desired range of notes. This allows a single playing surface to target a number of different devices.<ref name="Huber" />{{rp|79–80|date=November 2012}} MIDI capabilities can also be built into traditional keyboard instruments, such as [[grand piano]]s<ref name="Huber" />{{rp|82|date=November 2012}} and [[Rhodes piano]]s.<ref>"[http://www.keyboardmag.com/article/Rhodes-Mark-7/1896 Rhodes Mark 7]". ''keyboardmag.com''. New Bay Media, LLC. n.d. Web. Retrieved 7 August 2012.</ref> [[Pedal keyboard]]s can operate the pedal tones of a MIDI organ, or can drive a bass synthesizer such as the revived [[Moog Taurus]]. |
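Keyboard zones can be modelled as note ranges that each map to their own MIDI channel. The following sketch uses a hypothetical <code>Zone</code> class and an optional transposition field that is not mentioned in the text above; it only illustrates how overlapping zones cause one key to address several devices.

```python
from dataclasses import dataclass

NOTE_ON = 0x90  # upper four bits of a Note On status byte


@dataclass
class Zone:
    low_note: int       # lowest key number in the zone
    high_note: int      # highest key number in the zone
    channel: int        # MIDI channel, 1..16
    transpose: int = 0  # assumed extra feature: shift in semitones


def note_on_messages(zones, key, velocity):
    """Return one Note On message for every zone that contains the key."""
    messages = []
    for zone in zones:
        if zone.low_note <= key <= zone.high_note:
            note = key + zone.transpose
            if 0 <= note <= 127:
                messages.append(bytes([NOTE_ON | (zone.channel - 1), note, velocity]))
    return messages


# A bass zone on channel 1 that overlaps a lead zone on channel 2.
zones = [Zone(36, 59, channel=1), Zone(55, 84, channel=2, transpose=12)]
print(note_on_messages(zones, key=57, velocity=100))
```

A key inside the overlap produces two messages on different channels, so two instruments sound from one keypress.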

|||

[[File:Onyx The Digital Pied Piper.jpg|thumb|left|alt=A performer playing a MIDI wind controller|MIDI wind controllers can produce expressive, natural-sounding performances in a way that is difficult to achieve with keyboard controllers.]] |

|||

=====Wind controllers===== |

|||

{{main|Wind controller}} |

|||

Wind controllers allow MIDI parts to be played with the same kind of expression and articulation that is available to players of wind and brass instruments. They allow breath and pitch glide control that provide a more versatile kind of phrasing, particularly when playing sampled or [[Physical modelling synthesis|physically modeled]] wind instrument parts.<ref name="Huber" />{{rp|95|date=November 2012}} A typical wind controller has a sensor that converts breath pressure to volume information, and may allow pitch control through a lip pressure sensor and a pitch-bend wheel. Some models include a configurable key layout that can emulate different instruments' fingering systems.<ref>White, Paul. "[http://www.soundonsound.com/sos/jul98/articles/yamwx5.html Yamaha WX5]". ''Sound On Sound''. SOS Publications. Jul 1998. Print.</ref> Examples of such controllers include [[Akai]]'s [[EWI (musical instrument)|Electronic Wind Instrument]] (EWI) and Electronic Valve Instrument (EVI). The EWI uses a system of keypads and rollers modeled after a traditional [[woodwind instrument]], while the EVI is based on an acoustic [[brass instrument]], and has three switches that emulate a [[trumpet]]'s valves.<ref name="Manning" />{{rp|320–321|date=November 2012}} |

|||

=====Drum and percussion controllers===== |

|||

[[File:V-drums-2.jpg|thumb|right|alt=A MIDI drum kit|Drum controllers, such as the [[Roland V-Drums]], are often built in the form of an actual drum kit. The unit's sound module is mounted to the left.]] |

|||

Keyboards can be used to trigger drum sounds, but are impractical for playing repeated patterns such as rolls, due to the length of key travel. After keyboards, drum pads are the next most significant MIDI performance controllers.<ref name="Manning" />{{rp|319–320|date=November 2012}} Drum controllers may be built into drum machines, may be standalone control surfaces, or may emulate the look and feel of acoustic percussion instruments. The pads built into drum machines are typically too small and fragile to be played with sticks, and are played with fingers.<ref name="Huber" />{{rp|88|date=November 2012}} Dedicated drum pads such as the [[Roland Octapad]] or the [[DrumKAT]] are playable with the hands or with sticks, and are often built in the form of a drum kit. There are also percussion controllers such as the [[vibraphone]]-style [[MalletKAT]],<ref name="Huber" />{{rp|88–91|date=November 2012}} and [[Don Buchla]]'s [[Marimba Lumina]].<ref>{{cite web|url=http://www.buchla.com/mlumina/description.html |title="Marimba Lumina Described". ''buchla.com''. n.p. n.d. Web |publisher=Buchla.com |date= |accessdate=2012-11-27}}</ref> MIDI triggers can also be installed into acoustic drum and percussion instruments. Pads that can trigger a MIDI device can be homemade from a [[piezoelectric sensor]] and a practice pad or other piece of foam rubber.<ref>White, Paul. "[http://www.soundonsound.com/sos/1995_articles/aug95/diydrumpads.html DIY Drum Pads And Pedal Triggers]". ''Sound On Sound'' SOS Publications. Aug 1995. Print.</ref> |

|||

=====Stringed instrument controllers===== |

|||

A guitar can be fitted with special [[Pickup (music technology)|pickups]] that digitize the instrument's output, and allow it to play a synthesizer's sounds. These assign a separate MIDI channel for each string, and may give the player the choice of triggering the same sound from all six strings, or playing a different sound from each.<ref name="Huber" />{{rp|92–93|date=November 2012}} Some models, such as Yamaha's G10, dispense with the traditional guitar body and replace it with electronics.<ref name="Manning" />{{rp|320|date=November 2012}} Other systems, such as Roland's MIDI pickups, are included with or can be retrofitted to a standard instrument. Max Mathews designed a MIDI violin for [[Laurie Anderson]] in the mid-1980s,<ref>Goldberg, Roselee. ''Laurie Anderson''. New York: Abrams Books, 2000. p.80</ref> and MIDI-equipped violas, cellos, contrabasses, and mandolins also exist.<ref>Batcho, Jim. "Best of Both Worlds". ''Strings'' 17.4 (2002): n.a. Print.</ref> |

|||

[[File:Akai SynthStation 25.jpg|thumb|left|alt=A MIDI controller for use with an iPhone|A MIDI controller designed for use with an [[iPhone]]. The phone docks in the center.]] |

|||

=====Specialized performance controllers===== |

|||

[[DJ digital controller]]s may be standalone units such as the Faderfox or the [[Allen & Heath]] Xone 3D, or may be integrated with a specific piece of software, such as [[Traktor]] or [[Scratch Live]]. These typically respond to MIDI clock sync, and provide control over mixing, looping, effects, and sample playback.<ref>Price, Simon. "[http://www.soundonsound.com/sos/sep06/articles/allenheathxone.htm Allen & Heath Xone 3D]". ''Sound On Sound''. SOS Publications. Sep 2006. Print.</ref> |

|||

MIDI triggers attached to shoes or clothing are sometimes used by stage performers. The Kroonde Gamma wireless sensor can capture physical motion as MIDI signals.<ref>{{cite paper|id = {{citeseerx|10.1.1.84.8862}}|title=Wireless gesture controllers to affect information sonification|first=Kirsty|last=Beilharz}}</ref> Sensors built into a dance floor at the [[University of Texas at Austin]] convert dancers' movements into MIDI messages,<ref>{{cite paper | last1 = Pinkston | last2 = Kerkhoff | last3 = McQuilken | title = The U. T. Touch-Sensitive Dance Floor and MIDI Controller | publisher = The University of Texas at Austin | date = 10 August 2012 }}</ref> and [[David Rokeby]]'s ''Very Nervous System'' [[art installation]] created music from the movements of passers-through.<ref>Cooper, Douglas. "[http://www.wired.com/wired/archive/3.03/rokeby.html Very Nervous System]". ''Wired''. Condé Nast. 3.03: Mar 1995.</ref> Software applications exist which enable the use of [[iOS]] devices as gesture controllers.<ref>"[http://midiinmotion.fschwehn.com/the-glimpse/ The Glimpse]". ''midiinmotion.fschwehn.com''. n.p. n.d. Web. 20 August 2012</ref> |

|||

Numerous experimental controllers exist which abandon traditional musical interfaces entirely. These include the gesture-controlled [[Buchla Thunder]],<ref>{{cite web|url=http://www.buchla.com/historical/thunder/ |title="Buchla Thunder". ''buchla.com''. Buchla and Associates. n.d. Web |publisher=Buchla.com |date= |accessdate=2012-11-27}}</ref> sonomes such as the C-Thru Music Axis,<ref>"[http://www.midi.org/aboutmidi/products.php#NonTrad MIDI Products]". ''midi.org''. The MIDI Manufacturers Association. n.d. Web. 10 August 2012.</ref> which rearrange the scale tones into an isometric layout,<ref>{{cite web|url=http://www.theshapeofmusic.com/note-pattern.php |title="Note pattern". ''theshapeofmusic.com''. n.p. n.d. Web. 10 Aug 2012 |publisher=Theshapeofmusic.com |date= |accessdate=2012-11-27}}</ref> and Haken Audio's keyless, touch-sensitive [[Continuum (instrument)|Continuum]] playing surface.<ref>{{cite web|url=http://www.hakenaudio.com/Continuum/hakenaudioovervg.html |title="Overview". ''hakenaudio.com''. Haken Audio. n.d. Web. 10 Aug 2012 |publisher=Hakenaudio.com |date= |accessdate=2012-11-27}}</ref> Experimental MIDI controllers may be created from unusual objects, such as an ironing board with heat sensors installed,<ref>Gamboa, Glenn. "[http://www.wired.com/entertainment/music/news/2007/09/handmademusic MIDI Ironing Boards, Theremin Crutches Squeal at Handmade Music Event]". ''Wired.com''. Condé Nast. 27 September 2007. 13 August 2012. Web.</ref> or a sofa equipped with pressure sensors.<ref>"[http://www.midi.org/aboutmidi/products.php#NonTrad MIDI Products]". ''midi.org''. MIDI Manufacturers Association. n.d. 13 August 2012. Web.</ref> |

|||

====Auxiliary controllers==== |

|||

Software synthesizers offer great power and versatility, but some players feel that division of attention between a MIDI keyboard and a computer keyboard and mouse robs some of the immediacy from the playing experience.<ref>Preve, Francis. "Dave Smith", in "The 1st Annual ''Keyboard'' Hall of Fame". ''Keyboard'' (US). NewBay Media, LLC. Sep 2012. Print. p.18</ref> Devices dedicated to real-time MIDI control provide an ergonomic benefit, and can provide a greater sense of connection with the instrument than can an interface that is accessed through a mouse or a push-button digital menu. Controllers may be general-purpose devices that are designed to work with a variety of equipment, or they may be designed to work with a specific piece of software. Examples of the latter include Akai's APC40 controller for [[Ableton Live]], and Korg's MS-20ic controller that is a reproduction of their [[Korg MS-20|MS-20]] analog synthesizer. The MS-20ic controller includes [[patch cables]] that can be used to control signal routing in their virtual reproduction of the MS-20 synthesizer, and can also control third-party devices.<ref>"[http://www.vintagesynth.com/korg/legacy.php Korg Legacy Collection]". ''vintagesynth.com''. Vintage Synth Explorer. n.d. Web. 21 August 2012</ref> |

|||

=====Control surfaces===== |

|||

Control surfaces are hardware devices that provide a variety of controls that transmit real-time controller messages. These allow software instruments to be programmed without the discomfort of excessive mouse movements,<ref name="WalkerControl">Walker, Martin. "[http://www.soundonsound.com/sos/oct01/articles/pcmusician1001.asp Controlling Influence]". ''Sound On Sound''. SOS Publications. Oct 2001. Print.</ref> and hardware devices to be adjusted without the need to step through layered menus. Buttons, sliders, and knobs are the most common controllers provided, but [[rotary encoder]]s, transport controls, joysticks, [[ribbon controller]]s, vector touchpads in the style of Korg's [[Kaoss pad]], and optical controllers such as Roland's [[D-Beam]] may also be present. Control surfaces may be used for mixing, sequencer automation, turntablism, and lighting control.<ref name="WalkerControl" /> |

|||

=====Specialized real-time controllers===== |

|||

[[Audio control surface]]s often resemble [[mixing console]]s in appearance, and enable a level of hands-on control for changing parameters such as sound levels and effects applied to individual tracks of a [[multitrack recording]] or live performance output. |

|||

MIDI footswitches are commonly used to send MIDI program change commands to effects devices, but may be combined with pedals in a pedalboard that allows detailed programming of effects units. Pedals are available in the form of on/off switches, either momentary or latching, or as "rocker" pedals whose position determines the value of a MIDI continuous controller. |
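The two pedal behaviours described above correspond to two message types. The sketch below builds the raw bytes for each; the function names are hypothetical, and controller number 11 (commonly used for expression) is an assumed default rather than something the text specifies.

```python
CONTROL_CHANGE = 0xB0  # upper four bits of a Control Change status byte
PROGRAM_CHANGE = 0xC0  # upper four bits of a Program Change status byte


def rocker_to_cc(position: float, controller: int = 11, channel: int = 1) -> bytes:
    """Convert a rocker pedal position (0.0 to 1.0) to a Control Change message."""
    position = min(1.0, max(0.0, position))  # clamp out-of-range sensor readings
    value = round(position * 127)            # controller values span 0..127
    return bytes([CONTROL_CHANGE | (channel - 1), controller, value])


def footswitch_program_change(program: int, channel: int = 1) -> bytes:
    """Build the Program Change message sent when a footswitch is pressed."""
    return bytes([PROGRAM_CHANGE | (channel - 1), program & 0x7F])


print(rocker_to_cc(0.25).hex())
print(footswitch_program_change(5, channel=2).hex())
```

A momentary or latching on/off pedal could use the same Control Change form, sending only the values 0 and 127.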

|||

[[Drawbars|Drawbar]] controllers are for use with MIDI and virtual organs. Along with a set of drawbars for timbre control, they may provide controls for standard organ effects such as [[Leslie speaker|rotating speaker]] speed, vibrato and chorus.<ref>{{cite web|url=http://www.voceinc.com/manuals/Dbman_r3.htm#What |title="Drawbar Manual v. 1.2". Voce, Inc. n.d. Web. 10 Aug 2012 |publisher=Voceinc.com |date= |accessdate=2012-11-27}}</ref> |

|||

===Instruments=== |

|||

[[File:Korg 05RW front.jpg|thumb|alt=A General MIDI sound module.|A sound module, which requires an external controller (e.g., a MIDI keyboard) to trigger its sounds. These devices are highly portable, but their limited programming interface requires computer-based tools for comfortable access to their sound parameters.]] |

|||

A MIDI instrument contains ports to send and receive MIDI signals, a CPU to process those signals, an interface that allows user programming, audio circuitry to generate sound, and controllers. The operating system and factory sounds are often stored in a [[Read-only memory]] (ROM) unit.<ref name="Huber" />{{rp|67–70|date=November 2012}} |

|||

A MIDI instrument can also be a stand-alone module (without a piano-style keyboard) consisting of a General MIDI soundboard (GM, GS and/or XG) and onboard editing functions, including transposition and pitch changes, MIDI instrument changes, and adjustment of volume, pan, reverb levels and other MIDI controllers. Typically, the MIDI module will include a large screen that lets the user view information relevant to the currently selected function. Features can include scrolling lyrics (usually embedded in a MIDI file or karaoke MIDI file), playlists, a song library and editing screens. Some MIDI modules include a harmonizer and the ability to play back and transpose MP3 audio files. |

|||

====Synthesizers==== |

|||

{{main|Synthesizer}} |

|||

Synthesizers may employ any of a variety of sound generation techniques. They may include an integrated keyboard, or may exist as "sound modules" or "expanders" that generate sounds when triggered by an external controller. Sound modules are typically designed to be mounted in a [[19-inch rack]].<ref name="Huber" />{{rp|70–72|date=November 2012}} Manufacturers commonly produce a synthesizer in both standalone and rack-mounted versions, and often offer the keyboard version in a variety of sizes. |

|||

====Samplers==== |

|||

{{main|Sampler (musical instrument)}} |

|||

A [[sampler (musical instrument)|sampler]] can record and digitize audio, store it in [[random-access memory]] (RAM), and play it back. Samplers typically allow a user to edit a [[Sampling (signal processing)|sample]] and save it to a hard disk, apply effects to it, and shape it with the same tools that synthesizers use. They also may be available in either keyboard or rack-mounted form.<ref name="Huber" />{{rp|74–8|date=November 2012}} Instruments that generate sounds through sample playback, but have no recording capabilities, are known as "[[Rompler|ROMplers]]". |

|||

Samplers did not become established as viable MIDI instruments as quickly as synthesizers did, due to the expense of memory and processing power at the time.<ref name="Manning" />{{rp|295|date=November 2012}} The first low-cost MIDI sampler was the [[Ensoniq]] Mirage, introduced in 1984.<ref name="Manning" />{{rp|304|date=November 2012}} MIDI samplers are typically limited by displays that are too small to use to edit sampled waveforms, although some can be connected to a computer monitor.<ref name="Manning" />{{rp|305|date=November 2012}} |

|||

====Drum machines==== |

|||

{{main|Drum machine}} |

|||

Drum machines typically are sample playback devices that specialize in drum and percussion sounds. They commonly contain a sequencer that allows the creation of drum patterns, and allows them to be arranged into a song. There often are multiple audio outputs, so that each sound or group of sounds can be routed to a separate output. The individual drum voices may be playable from another MIDI instrument, or from a sequencer.<ref name="Huber" />{{rp|84|date=November 2012}} |

|||

====Workstations and hardware sequencers==== |

|||

{{main|Music workstation|Music sequencer}} |

|||

[[File:Tenori-on.jpg|thumb|alt=A button matrix MIDI controller|Yamaha's [[Tenori-on]] controller allows arrangements to be built by "drawing" on its array of lighted buttons. The resulting arrangements can be played back using its internal sounds or external sound sources, or recorded in a computer-based sequencer.]] |

|||

Sequencer technology predates MIDI. [[Analog sequencer]]s use [[CV/Gate]] signals to control pre-MIDI analog synthesizers. MIDI sequencers typically are operated by transport features modeled after those of [[Tape recorder|tape decks]]. They are capable of recording MIDI performances and arranging them into individual tracks, in the manner of [[multitrack recording]]. Music workstations combine controller keyboards with an internal sound generator and a sequencer. These can be used to build complete arrangements and play them back using their own internal sounds, and function as self-contained music production studios. They commonly include file storage and transfer capabilities.<ref name="Huber" />{{rp|103–4|date=November 2012}} |

|||

===Effects devices=== |

|||

{{main|Effects unit}} |

|||

Audio effects units that are frequently used on stage and in recording, such as [[reverberation|reverbs]], [[Delay (audio effect)|delays]] and [[chorus effect#Electronic effect|choruses]], can be remotely adjusted via MIDI signals. Some units allow only a limited number of parameters to be controlled this way, but most will respond to program change messages. The [[Eventide, Inc|Eventide]] H3000 [[Harmonizer|Ultra-harmonizer]] is an example of a unit that allows such extensive MIDI control that it is playable as a synthesizer.<ref name="Manning">Manning, Peter. ''Electronic and Computer Music''. 1985. Oxford: Oxford University Press, 1994. Print.</ref>{{rp|322|date=November 2012}} |

|||

--> |

|||

==Technical specifications== |

|||

MIDI messages are made up of 8-bit words (commonly called [[byte]]s) that are transmitted [[comunicação serial|serially]] at 31.25 [[bitrate|kbit/s]]. This rate was chosen because it is an exact division of 1 MHz, the speed at which many early [[microprocessador|microprocessors]] operated.<ref name="Manning" />{{rp|286|date=Novembro de 2012}} The first bit of each word identifies whether the word is a status byte or a data byte, and is followed by seven bits of information.<ref name="Huber" />{{rp|13–14|date=November 2012}} A start bit and a stop bit are added to each byte for framing purposes, so a MIDI byte requires ten bits for transmission.<ref name="Manning" />{{rp|286|date=Novembro de 2012}} |
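
The framing arithmetic in the paragraph above can be checked with a short sketch. The function names are illustrative only, and the sketch assumes the usual convention that a status byte has its most significant bit set while a data byte has it clear.

```python
BAUD_RATE = 31_250      # bits per second (31.25 kbit/s)
BITS_PER_FRAME = 10     # 1 start bit + 8 data bits + 1 stop bit
CLOCK_HZ = 1_000_000    # the 1 MHz reference mentioned in the text


def is_status_byte(byte: int) -> bool:
    """Return True when the first (most significant) bit marks a status byte."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("a MIDI byte must fit in 8 bits")
    return bool(byte & 0x80)


def transmission_time_us(num_bytes: int) -> float:
    """Time on the wire, in microseconds, for num_bytes framed MIDI bytes."""
    return num_bytes * BITS_PER_FRAME * 1_000_000 / BAUD_RATE


# 1 MHz divides evenly by the MIDI bit rate (factor of 32).
clock_divider = CLOCK_HZ // BAUD_RATE
```

Under these assumptions a single byte occupies the link for 320 microseconds, so a three-byte message takes a little under one millisecond.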

|||

<!-- For transmission, each MIDI message is received in parallel by a UART (''Universal Asynchronous Receiver-Transmitter''), which converts it to a serial format. This serial link is used between devices at a transmission rate of 31,250 bits per second (31.25 kbit/s).{{verificar credibiblidade}} --> |

|||

A MIDI link can carry sixteen independent channels of information. The channels are numbered 1 to 16, but their actual [[código binário|binary encoding]] is 0 to 15. A device can be configured to listen only to specific channels and ignore messages sent on other channels ("Omni Off" mode), or it can listen to all channels, effectively ignoring the channel address ("Omni On" mode). An individual device may be [[monofonia|monophonic]] (the start of a new "note-on" MIDI command implies the end of the previous note) or [[polifonia|polyphonic]] (several notes may sound at once, until the instrument's polyphony limit is reached, the notes reach the end of their decay envelope, or explicit "note-off" MIDI commands are received). Receiving devices can typically be set to all four combinations of "omni off/on" and "mono/poly" modes.<ref name="Huber" />{{rp|14–18|date=Novembro de 2012}} |
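
A minimal sketch of the channel numbering and Omni filtering described above. The helper names are hypothetical, and a real receiver may listen on more than one channel at once.

```python
def channel_to_wire(channel: int) -> int:
    """Convert a user-facing channel number (1-16) to its binary encoding (0-15)."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channels are numbered 1 to 16")
    return channel - 1


def wire_to_channel(encoded: int) -> int:
    """Convert the 4-bit binary encoding (0-15) back to a channel number (1-16)."""
    if not 0 <= encoded <= 15:
        raise ValueError("the encoded channel must fit in 4 bits")
    return encoded + 1


def accepts(message_channel: int, listen_channel: int, omni_on: bool) -> bool:
    """Omni On ignores the channel address; Omni Off requires a match."""
    return omni_on or message_channel == listen_channel
```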

|||

===Messages=== |

|||

A MIDI message is an instruction that controls some aspect of the receiving device. It consists of a status byte, which indicates the type of the message, followed by up to two data bytes that contain the parameters.<ref name="Brewster">Brewster, Stephen. "Nonspeech Auditory Output". ''The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies, and Emerging Applications''. Ed. Julie A. Jacko; Andrew Sears. Mahwah: Lawrence Erlbaum Associates, 2003. p.227</ref> MIDI messages can be "channel messages", which are sent on only one of the 16 channels and can be heard only by devices receiving on that channel, or "system messages", which are heard by all devices. Any data not relevant to a receiving device is ignored.<ref name="Gibbs">Gibbs, Jonathan (Rev. by Peter Howell) "Electronic Music". ''Sound Recording Practice'', 4th Ed. Ed. John Borwick. Oxford: Oxford University Press, 1996</ref>{{rp|384|date=Novembro de 2012}} There are five types of message: ''Channel Voice'', ''Channel Mode'', ''System Common'', ''System Real-Time'', and ''System Exclusive''.<ref>Hass, Jeffrey. "[http://www.indiana.edu/%7Eemusic/etext/MIDI/chapter3_MIDI3.shtml Chapter Three: How MIDI works 3]". Indiana University Jacobs School of Music. 2010. Web. 13 August 2012.</ref> |
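
The five message types can be told apart from the status byte, plus the first data byte in the case of Channel Mode. The sketch below assumes the status-byte ranges of the MIDI 1.0 specification (0x80 to 0xEF for channel messages, 0xF0 for System Exclusive, 0xF1 to 0xF7 for System Common, 0xF8 to 0xFF for System Real-Time) and the convention that control-change controller numbers 120 to 127 are Channel Mode messages. The function name is illustrative.

```python
from typing import Optional


def classify_status(status: int, first_data: Optional[int] = None) -> str:
    """Name the message type announced by a status byte."""
    if not 0x80 <= status <= 0xFF:
        raise ValueError("not a status byte: the most significant bit must be set")
    if status < 0xF0:
        # Channel messages: the high nibble is the message kind, the low nibble the channel.
        is_control_change = (status & 0xF0) == 0xB0
        if is_control_change and first_data is not None and first_data >= 120:
            return "Channel Mode"
        return "Channel Voice"
    if status == 0xF0:
        return "System Exclusive"
    if status < 0xF8:
        return "System Common"
    return "System Real-Time"
```

For instance, `classify_status(0xF8)` (the MIDI clock status byte) falls in the System Real-Time range, while `classify_status(0xB0, 123)` (all notes off) is a Channel Mode message.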

|||

''Channel Voice'' messages transmit real-time performance data over a single channel. Examples include "note-on" messages, which contain a MIDI note number that specifies the note's pitch, a velocity value that indicates how forcefully the note was played, and the channel number; "note-off" messages, which end a note; program change messages, which change a device's patch; and control changes, which allow adjustment of an instrument's parameters. ''Channel Mode'' messages include the Omni/mono/poly mode on and off messages, as well as messages to reset all controllers to their default state or to send "note-off" messages for all notes. System messages do not include channel numbers, and are received by every device in the MIDI chain. MIDI time code is an example of a ''System Common'' message. ''System Real-Time'' messages provide synchronization, and include MIDI clock and Active Sensing.<ref name="Huber" />{{rp|18–35|date=November 2012}} |
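
As a rough illustration of a Channel Voice message on the wire, the sketch below builds and parses a three-byte "note-on". It assumes the standard status values 0x90 (note-on) and 0x80 (note-off), with the channel stored in the low four bits of the status byte. The names `ChannelMessage`, `note_on` and `parse_channel_message` are hypothetical.

```python
from typing import NamedTuple, Tuple

NOTE_OFF = 0x80
NOTE_ON = 0x90


class ChannelMessage(NamedTuple):
    kind: int               # high nibble of the status byte, e.g. NOTE_ON
    channel: int            # user-facing channel number, 1-16
    data: Tuple[int, ...]   # 7-bit data bytes


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Encode a note-on: status byte, note number, velocity."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("data bytes carry only seven bits (0-127)")
    return bytes([NOTE_ON | (channel - 1), note, velocity])


def parse_channel_message(raw: bytes) -> ChannelMessage:
    """Split a channel message into its kind, channel and data bytes."""
    status = raw[0]
    if not 0x80 <= status <= 0xEF:
        raise ValueError("not a channel message status byte")
    data = tuple(raw[1:])
    if any(b & 0x80 for b in data):
        raise ValueError("data bytes must have the top bit clear")
    return ChannelMessage(status & 0xF0, (status & 0x0F) + 1, data)
```

Usage: `parse_channel_message(note_on(10, 36, 127))` recovers the kind `NOTE_ON`, channel 10, and the data bytes `(36, 127)`.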

|||

====System Exclusive messages (SysEx)==== |

|||

''System Exclusive'' (SysEx) messages are a major reason for the flexibility and longevity of the MIDI standard. They allow manufacturers to create proprietary messages that provide more thorough control over their equipment than standard MIDI messages do.<ref name="Manning" />{{rp|287|date=Novembro de 2012}} SysEx messages are addressed to a specific device in a system. Each manufacturer has a unique identifier that is included in its SysEx messages, which helps ensure that the messages are received only by the target device and ignored by all others. Many instruments also include a SysEx ID setting, which allows two devices of the same model to be addressed independently while connected to the same system.<ref>Hass, Jeffrey. "[http://www.indiana.edu/%7Eemusic/etext/MIDI/chapter3_MIDI9.shtml Chapter Three: How MIDI works 9]". Indiana University Jacobs School of Music. 2010. Web. 13 August 2012.</ref> |

|||

SysEx messages can include functionality beyond what the MIDI standard provides. They are targeted at a specific instrument and are ignored by all other devices in the system. The structure of a message is defined specifically for the device that will receive it, and may contain any type of data. For example, a device may have a SysEx message specification that contains [[ASCII]] characters.{{carece de fontes}} |
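
A hedged sketch of how such a message might be framed and filtered. It assumes the standard start and end markers 0xF0 and 0xF7 and a one-byte manufacturer identifier; the value 0x7D is the identifier set aside for non-commercial use. Placing the device's SysEx ID immediately after the manufacturer identifier is an assumption of this example, since the layout of the body is defined by each manufacturer.

```python
SYSEX_START = 0xF0
SYSEX_END = 0xF7
EXAMPLE_MANUFACTURER_ID = 0x7D  # identifier reserved for non-commercial use


def build_sysex(manufacturer_id: int, device_id: int, payload: bytes) -> bytes:
    """Wrap a manufacturer ID, a device ID and a 7-bit payload in SysEx framing."""
    body = bytes([manufacturer_id, device_id]) + payload
    if any(b & 0x80 for b in body):
        raise ValueError("every byte between the markers must have the top bit clear")
    return bytes([SYSEX_START]) + body + bytes([SYSEX_END])


def is_addressed_to(message: bytes, manufacturer_id: int, device_id: int) -> bool:
    """Return True only for the device this message targets; others ignore it."""
    return (
        len(message) >= 4
        and message[0] == SYSEX_START
        and message[-1] == SYSEX_END
        and message[1] == manufacturer_id
        and message[2] == device_id
    )
```

Because ASCII characters fit in seven bits, a text payload such as `b"HELLO"` can be carried unchanged: `build_sysex(EXAMPLE_MANUFACTURER_ID, 0x01, b"HELLO")`.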

|||

====MIDI Implementation Chart==== |

|||

Devices typically do not respond to every type of message defined by the MIDI specification. The MIDI Implementation Chart was standardized by the MMA as a way for users to see what specific capabilities an instrument has, and how it responds to messages.<ref name="Huber" />{{rp|231|date=Novembro de 2012}} A specific MIDI Implementation Chart is usually published for each MIDI device within the device's documentation. |

|||

==Extensions== |

|||

The flexibility and widespread adoption of MIDI have led to many refinements of the standard, and have enabled its application to purposes beyond those for which it was originally intended. |

|||

===General MIDI=== |

|||

{{Artigo principal|[[General MIDI]]}} |

|||

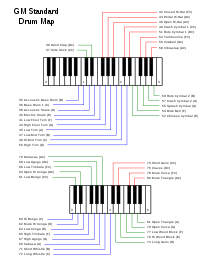

[[File:GM Standard Drum Map on the keyboard.svg|thumb|alt=GM Standard Drum Map on the keyboard|The GM Standard Drum Map, which specifies the percussion sound that a given note will trigger.]] |

|||

General MIDI, or GM, is a specification for synthesizers that imposes several requirements beyond the more general MIDI standard. While the MIDI standard provides a communications protocol that ensures that different instruments (or components) can interoperate at a basic level (e.g., playing a note on a MIDI keyboard will cause a sound module to play a musical note), General MIDI goes further in two ways: it requires that all GM-compatible instruments meet a minimum set of specifications (such as at least 24 notes of polyphony), and it attaches specific interpretations to many parameters and control messages that were left unspecified in the MIDI standard (such as defining instrument sounds for each of the 128 program numbers).{{carece de fontes}} |
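
To illustrate what fixing the meaning of program numbers buys in practice, the sketch below encodes a program change and looks up a few entries of the GM sound set and drum map. Only a handful of well-known entries are listed, not the full tables. The sketch assumes the standard program change status value 0xC0, the GM convention that channel 10 carries percussion, and that program numbers listed as 1 to 128 are sent on the wire as 0 to 127. All names are illustrative.

```python
PROGRAM_CHANGE = 0xC0
GM_PERCUSSION_CHANNEL = 10
GM_MIN_POLYPHONY = 24  # minimum number of simultaneous notes cited in the text

# Partial excerpts only; the full GM sound set has 128 programs.
GM_PROGRAM_NAMES = {
    1: "Acoustic Grand Piano",
    41: "Violin",
    57: "Trumpet",
    74: "Flute",
}
GM_DRUM_NOTES = {
    36: "Bass Drum 1",
    38: "Acoustic Snare",
    42: "Closed Hi-Hat",
}


def program_change(channel: int, program: int) -> bytes:
    """Encode a program change; program is the listed number, 1 to 128."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    if not 1 <= program <= 128:
        raise ValueError("GM defines programs 1 to 128")
    return bytes([PROGRAM_CHANGE | (channel - 1), program - 1])


def describe_note(channel: int, note: int) -> str:
    """On the percussion channel a note number selects a drum sound, not a pitch."""
    if channel == GM_PERCUSSION_CHANNEL:
        return GM_DRUM_NOTES.get(note, f"percussion note {note}")
    return f"pitched note {note}"
```

With these assumptions, `program_change(1, 41)` selects the violin on channel 1 of any GM-compatible instrument, and note 38 on channel 10 triggers the snare rather than a pitched note.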

|||

<!-- |

|||