User:Wcris~ptwiki/qft

Quantum field theory or QFT[1] provides a theoretical framework for constructing quantum mechanical models of systems classically described by fields or of many-body systems. It is widely used in particle physics and condensed matter physics. Most theories in modern particle physics, including the Standard Model of elementary particles and their interactions, are formulated as relativistic quantum field theories. In condensed matter physics, quantum field theories are used in many circumstances, especially those where the number of particles is allowed to fluctuate, for example in the BCS theory of superconductivity.

In quantum field theory (QFT), the forces between particles are mediated by other particles. The electromagnetic force between two electrons is caused by an exchange of photons. Intermediate vector bosons mediate the weak force and gluons mediate the strong force. There is currently no complete quantum theory of the remaining fundamental force, gravity, but many of the proposed theories postulate the existence of a graviton particle which mediates it. These force-carrying particles are virtual particles and, by definition, cannot be detected while carrying the force, because such a detection would imply that the force is not being carried.

In QFT, photons are not thought of as 'little billiard balls'; they are considered to be field quanta, necessarily chunked ripples in a field that 'look like' particles. Fermions, like the electron, can also be described as ripples in a field, where each kind of fermion has its own field. In summary, the classical picture that "everything is particles and fields" resolves, in quantum field theory, into "everything is particles", which then resolves into "everything is fields". In the end, particles are regarded as excited states of a field (field quanta).

History

Quantum field theory originated in the 1920s from the problem of creating a quantum mechanical theory of the electromagnetic field. In 1926, Max Born, Pascual Jordan, and Werner Heisenberg constructed such a theory by expressing the field's internal degrees of freedom as an infinite set of harmonic oscillators and by employing the usual procedure for quantizing those oscillators (canonical quantization). This theory assumed that no electric charges or currents were present and today would be called a free field theory. The first reasonably complete theory of quantum electrodynamics, which included both the electromagnetic field and electrically charged matter (specifically, electrons) as quantum mechanical objects, was created by Paul Dirac in 1927. This quantum field theory could be used to model important processes such as the emission of a photon by an electron dropping into a quantum state of lower energy, a process in which the number of particles changes — one atom in the initial state becomes an atom plus a photon in the final state. It is now understood that the ability to describe such processes is one of the most important features of quantum field theory.

It was evident from the beginning that a proper quantum treatment of the electromagnetic field had to somehow incorporate Einstein's relativity theory, which had after all grown out of the study of classical electromagnetism. This need to put together relativity and quantum mechanics was the second major motivation in the development of quantum field theory. Pascual Jordan and Wolfgang Pauli showed in 1928 that quantum fields could be made to behave in the way predicted by special relativity during coordinate transformations (specifically, they showed that the field commutators were Lorentz invariant), and in 1933 Niels Bohr and Leon Rosenfeld showed that this result could be interpreted as a limitation on the ability to measure fields at space-like separations, exactly as required by relativity. A further boost for quantum field theory came with the discovery of the Dirac equation, a single-particle equation obeying both relativity and quantum mechanics, when it was shown that several of its undesirable properties (such as negative-energy states) could be eliminated by reformulating the Dirac equation as a quantum field theory. This work was performed by Wendell Furry, Robert Oppenheimer, Vladimir Fock, and others.

The third thread in the development of quantum field theory was the need to handle the statistics of many-particle systems consistently and with ease. In 1927, Jordan tried to extend the canonical quantization of fields to the many-body wavefunctions of identical particles, a procedure that is sometimes called second quantization. In 1928, Jordan and Eugene Wigner found that the quantum field describing electrons, or other fermions, had to be expanded using anti-commuting creation and annihilation operators due to the Pauli exclusion principle. This thread of development was incorporated into many-body theory, and strongly influenced condensed matter physics and nuclear physics.

Despite its early successes, quantum field theory was plagued by several serious theoretical difficulties. Many seemingly-innocuous physical quantities, such as the energy shift of electron states due to the presence of the electromagnetic field, gave infinity — a nonsensical result — when computed using quantum field theory. This "divergence problem" was solved during the 1940s by Bethe, Tomonaga, Schwinger, Feynman, and Dyson, through the procedure known as renormalization. This phase of development culminated with the construction of the modern theory of quantum electrodynamics (QED). Beginning in the 1950s with the work of Yang and Mills, QED was generalized to a class of quantum field theories known as gauge theories. The 1960s and 1970s saw the formulation of a gauge theory now known as the Standard Model of particle physics, which describes all known elementary particles and the interactions between them. The weak interaction part of the standard model was formulated by Sheldon Glashow, with the Higgs mechanism added by Steven Weinberg and Abdus Salam. The theory was shown to be renormalizable and hence consistent by Gerardus 't Hooft and Martinus Veltman.

Also during the 1970s, parallel developments in the study of phase transitions in condensed matter physics led Leo Kadanoff, Michael Fisher and Kenneth Wilson (extending work of Ernst Stueckelberg, Andre Peterman, Murray Gell-Mann and Francis Low) to a set of ideas and methods known as the renormalization group. By providing a better physical understanding of the renormalization procedure invented in the 1940s, the renormalization group sparked what has been called the "grand synthesis" of theoretical physics, uniting the quantum field theoretical techniques used in particle physics and condensed matter physics into a single theoretical framework.

The study of quantum field theory is alive and flourishing, as are applications of this method to many physical problems. It remains one of the most vital areas of theoretical physics today, providing a common language to many branches of physics.

Principles of quantum field theory

Classical fields and quantum fields

Quantum mechanics, in its most general formulation, is a theory of abstract operators (observables) acting on an abstract state space (Hilbert space), where the observables represent physically-observable quantities and the state space represents the possible states of the system under study. Furthermore, each observable corresponds, in a technical sense, to the classical idea of a degree of freedom. For instance, the fundamental observables associated with the motion of a single quantum mechanical particle are the position and momentum operators $\hat{\mathbf{r}}$ and $\hat{\mathbf{p}}$. Ordinary quantum mechanics deals with systems such as this, which possess a small set of degrees of freedom.

(It is important to note, at this point, that this article does not use the word "particle" in the context of wave–particle duality. In quantum field theory, "particle" is a generic term for any discrete quantum mechanical entity, such as an electron, which can behave like classical particles or classical waves under different experimental conditions.)

A quantum field is a quantum mechanical system containing a large, and possibly infinite, number of degrees of freedom. This is not as exotic a situation as one might think. A classical field contains a set of degrees of freedom at each point of space; for instance, the classical electromagnetic field defines two vectors (the electric field $\mathbf{E}$ and the magnetic field $\mathbf{B}$) that can in principle take on distinct values for each position $\mathbf{r}$. When the field as a whole is considered as a quantum mechanical system, its observables form an infinite (in fact uncountable) set, because $\mathbf{r}$ is continuous.

Furthermore, the degrees of freedom in a quantum field are arranged in "repeated" sets. For example, the degrees of freedom in an electromagnetic field can be grouped according to the position $\mathbf{r}$, with exactly two vectors for each $\mathbf{r}$. Note that $\mathbf{r}$ is an ordinary number that "indexes" the observables; it is not to be confused with the position operator $\hat{\mathbf{r}}$ encountered in ordinary quantum mechanics, which is an observable. (Thus, ordinary quantum mechanics is sometimes referred to as "zero-dimensional quantum field theory", because it contains only a single set of observables.) It is also important to note that there is nothing special about $\mathbf{r}$ because, as it turns out, there is generally more than one way of indexing the degrees of freedom in the field.

In the following sections, we will show how these ideas can be used to construct a quantum mechanical theory with the desired properties. We will begin by discussing single-particle quantum mechanics and the associated theory of many-particle quantum mechanics. Then, by finding a way to index the degrees of freedom in the many-particle problem, we will construct a quantum field and study its implications.

Single-particle and many-particle quantum mechanics

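In ordinary quantum mechanics, the time-dependent Schrödinger equation describing the time evolution of the quantum state of a single non-relativistic particle is

$$\left[ \frac{|\hat{\mathbf{p}}|^2}{2m} + V(\hat{\mathbf{r}}) \right] |\psi(t)\rangle = i\hbar \frac{\partial}{\partial t} |\psi(t)\rangle,$$

where $m$ is the particle's mass, $V$ is the applied potential, and $|\psi\rangle$ denotes the quantum state (we are using bra-ket notation).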

We wish to consider how this problem generalizes to $N$ particles. There are two motivations for studying the many-particle problem. The first is a straightforward need in condensed matter physics, where typically the number of particles is on the order of Avogadro's number ($6.0221415 \times 10^{23}$). The second motivation for the many-particle problem arises from particle physics and the desire to incorporate the effects of special relativity. If one attempts to include the relativistic rest energy into the above equation, the result is either the Klein-Gordon equation or the Dirac equation. However, these equations have many unsatisfactory qualities; for instance, they possess energy eigenvalues which extend to $-\infty$, so that there seems to be no easy definition of a ground state. It turns out that such inconsistencies arise from neglecting the possibility of dynamically creating or destroying particles, which is a crucial aspect of relativity. Einstein's famous mass-energy relation predicts that sufficiently massive particles can decay into several lighter particles, and sufficiently energetic particles can combine to form massive particles. For example, an electron and a positron can annihilate each other to create photons. Thus, a consistent relativistic quantum theory must be formulated as a many-particle theory.

Furthermore, we will assume that the particles are indistinguishable. As described in the article on identical particles, this implies that the state of the entire system must be either symmetric (bosons) or antisymmetric (fermions) when the coordinates of its constituent particles are exchanged. These multi-particle states are rather complicated to write. For example, the general quantum state of a system of $N$ bosons is written as

$$|\phi_1 \cdots \phi_N\rangle = \frac{1}{\sqrt{N! \, \prod_j N_j!}} \sum_{p \in S_N} |\phi_{p(1)}\rangle \cdots |\phi_{p(N)}\rangle,$$

where $|\phi_i\rangle$ are the single-particle states, $N_j$ is the number of particles occupying state $j$, and the sum is taken over all possible permutations $p$ acting on $N$ elements. In general, this is a sum of $N!$ ($N$ factorial) terms, which quickly becomes unmanageable as $N$ increases. The way to simplify this problem is to turn it into a quantum field theory.

Second quantization

In this section, we will describe a method for constructing a quantum field theory called second quantization. This basically involves choosing a way to index the quantum mechanical degrees of freedom in the space of multiple identical-particle states. It is based on the Hamiltonian formulation of quantum mechanics; several other approaches exist, such as the Feynman path integral[2], which uses a Lagrangian formulation. For an overview, see the article on quantization.

Second quantization of bosons

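For simplicity, we will first discuss second quantization for bosons, which form perfectly symmetric quantum states. Let us denote the mutually orthogonal single-particle states by $|\phi_1\rangle, |\phi_2\rangle, |\phi_3\rangle$, and so on. For example, the 3-particle state with one particle in state $|\phi_1\rangle$ and two in state $|\phi_2\rangle$ is

$$\frac{1}{\sqrt{3}} \left[ |\phi_1\rangle |\phi_2\rangle |\phi_2\rangle + |\phi_2\rangle |\phi_1\rangle |\phi_2\rangle + |\phi_2\rangle |\phi_2\rangle |\phi_1\rangle \right].$$

The first step in second quantization is to express such quantum states in terms of occupation numbers, by listing the number of particles occupying each of the single-particle states $|\phi_1\rangle, |\phi_2\rangle$, etc. This is simply another way of labelling the states. For instance, the above 3-particle state is denoted as

$$|1, 2, 0, 0, 0, \cdots\rangle.$$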
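The relabelling described above can be sketched in a few lines of Python. This is only an illustration: the function names `occupation_numbers` and `distinct_orderings` are hypothetical, and single-particle states are represented simply by their integer labels 1, 2, 3, ...

```python
from collections import Counter
from math import factorial


def occupation_numbers(labels, n_states):
    """Return (N_1, ..., N_n) for a list of 1-based single-particle state labels."""
    counts = Counter(labels)
    return tuple(counts.get(j, 0) for j in range(1, n_states + 1))


def distinct_orderings(occ):
    """Number of distinct product states in the symmetrized sum: N! / prod_j N_j!."""
    n_total = sum(occ)
    denom = 1
    for n_j in occ:
        denom *= factorial(n_j)
    return factorial(n_total) // denom


# One particle in state 1 and two in state 2, with five states tracked.
occ = occupation_numbers([1, 2, 2], n_states=5)
print(occ)                      # (1, 2, 0, 0, 0)
print(distinct_orderings(occ))  # 3
```

The occupation-number tuple has a fixed length regardless of how many orderings the symmetrized product state contains, which is why this labelling is so much easier to handle.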

The next step is to expand the $N$-particle state space to include the state spaces for all possible values of $N$. This extended state space, known as a Fock space, is composed of the state space of a system with no particles (the so-called vacuum state), plus the state space of a 1-particle system, plus the state space of a 2-particle system, and so forth. It is easy to see that there is a one-to-one correspondence between the occupation number representation and valid boson states in the Fock space.

At this point, the quantum mechanical system has become a quantum field in the sense we described above. The field's elementary degrees of freedom are the occupation numbers, and each occupation number is indexed by a number $j$, indicating which of the single-particle states $|\phi_j\rangle$ it refers to.

The properties of this quantum field can be explored by defining creation and annihilation operators, which add and subtract particles. They are analogous to "ladder operators" in the quantum harmonic oscillator problem, which add and subtract energy quanta. However, these operators literally create and annihilate particles of a given quantum state. The bosonic annihilation operator $a_2$ and creation operator $a_2^\dagger$ have the following effects:

$$a_2 |N_1, N_2, N_3, \cdots\rangle = \sqrt{N_2} \, |N_1, (N_2 - 1), N_3, \cdots\rangle,$$

$$a_2^\dagger |N_1, N_2, N_3, \cdots\rangle = \sqrt{N_2 + 1} \, |N_1, (N_2 + 1), N_3, \cdots\rangle.$$

It can be shown that these are operators in the usual quantum mechanical sense, i.e. linear operators acting on the Fock space. Furthermore, they are indeed Hermitian conjugates, which justifies the way we have written them. They can be shown to obey the commutation relation

$$[a_i, a_j] = 0, \quad [a_i^\dagger, a_j^\dagger] = 0, \quad [a_i, a_j^\dagger] = \delta_{ij},$$

where $\delta_{ij}$ stands for the Kronecker delta. These are precisely the relations obeyed by the ladder operators for an infinite set of independent quantum harmonic oscillators, one for each single-particle state. Adding or removing bosons from each state is therefore analogous to exciting or de-exciting a quantum of energy in a harmonic oscillator.
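As a rough numerical check of the commutation relation for a single mode, the sketch below builds the matrix of the annihilation operator in a truncated occupation-number basis. The cutoff `N_MAX` and all function names are assumptions made for illustration. The true Fock space is infinite, so the relation can only hold away from the cutoff.

```python
from math import sqrt

N_MAX = 6  # hypothetical truncation: keep occupation numbers 0..N_MAX


def annihilation(n_max):
    """Matrix of a in the basis |0>, ..., |n_max>, using a|n> = sqrt(n)|n-1>."""
    dim = n_max + 1
    a = [[0.0] * dim for _ in range(dim)]
    for n in range(1, dim):
        a[n - 1][n] = sqrt(n)
    return a


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(x, y):
    dim = len(x)
    return [[sum(x[i][k] * y[k][j] for k in range(dim)) for j in range(dim)]
            for i in range(dim)]


def commutator(x, y):
    xy, yx = matmul(x, y), matmul(y, x)
    dim = len(x)
    return [[xy[i][j] - yx[i][j] for j in range(dim)] for i in range(dim)]


a = annihilation(N_MAX)
a_dag = transpose(a)  # the matrix is real, so the Hermitian conjugate is the transpose
comm = commutator(a, a_dag)

# Diagonal of [a, a^dagger]: close to 1 everywhere except the last entry,
# which equals -N_MAX purely because of the truncation.
print([round(comm[i][i], 12) for i in range(N_MAX + 1)])
```

The last diagonal entry is an artifact of cutting off the basis, not a property of the operators themselves.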

The Hamiltonian of the quantum field (which, through the Schrödinger equation, determines its dynamics) can be written in terms of creation and annihilation operators. For instance, the Hamiltonian of a field of free (non-interacting) bosons is

$$H = \sum_k E_k \, a_k^\dagger a_k,$$

where $E_k$ is the energy of the $k$-th single-particle energy eigenstate. Note that

$$a_k^\dagger a_k |\cdots, N_k, \cdots\rangle = N_k |\cdots, N_k, \cdots\rangle.$$
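Because each occupation-number state is an eigenstate of every product of a creation operator with its annihilation operator, the free-boson Hamiltonian acts on such a state by multiplying it by the sum of single-particle energies weighted by occupation numbers. A minimal sketch, with hypothetical energy values in arbitrary units:

```python
def free_boson_energy(energies, occ):
    """Eigenvalue of the free-boson Hamiltonian on an occupation-number state."""
    return sum(e_k * n_k for e_k, n_k in zip(energies, occ))


energies = [0.5, 1.5, 2.5]  # hypothetical single-particle energies
print(free_boson_energy(energies, (1, 2, 0)))  # 3.5
```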

Second quantization of fermions

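It turns out that a different definition of creation and annihilation must be used for describing fermions. According to the Pauli exclusion principle, fermions cannot share quantum states, so their occupation numbers $N_j$ can only take on the value 0 or 1. The fermionic annihilation operators $c_j$ and creation operators $c_j^\dagger$ are defined by

$$c_j |N_1, N_2, \cdots, N_j = 0, \cdots\rangle = 0,$$

$$c_j |N_1, N_2, \cdots, N_j = 1, \cdots\rangle = (-1)^{N_1 + \cdots + N_{j-1}} |N_1, N_2, \cdots, N_j = 0, \cdots\rangle,$$

$$c_j^\dagger |N_1, N_2, \cdots, N_j = 0, \cdots\rangle = (-1)^{N_1 + \cdots + N_{j-1}} |N_1, N_2, \cdots, N_j = 1, \cdots\rangle,$$

$$c_j^\dagger |N_1, N_2, \cdots, N_j = 1, \cdots\rangle = 0.$$

These obey an anticommutation relation:

$$\{c_i, c_j\} = 0, \quad \{c_i^\dagger, c_j^\dagger\} = 0, \quad \{c_i, c_j^\dagger\} = \delta_{ij}.$$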

One may notice from this that applying a fermionic creation operator twice gives zero, so it is impossible for the particles to share single-particle states, in accordance with the exclusion principle.
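The sign bookkeeping for fermions is easy to get wrong, so a small check can be helpful. The sketch below assumes the common convention in which each operator picks up a factor of -1 for every occupied state that precedes it in the ordering. States are tuples of 0s and 1s with 0-based mode indices, and all names are illustrative.

```python
from itertools import product


def create(j, state):
    """Apply the creation operator for mode j; return (sign, new_state) or None for zero."""
    if state[j] == 1:
        return None  # Pauli exclusion: the mode is already occupied
    sign = (-1) ** sum(state[:j])
    return sign, state[:j] + (1,) + state[j + 1:]


def annihilate(j, state):
    """Apply the annihilation operator for mode j; return (sign, new_state) or None."""
    if state[j] == 0:
        return None
    sign = (-1) ** sum(state[:j])
    return sign, state[:j] + (0,) + state[j + 1:]


def apply(op, j, vec):
    """Apply op to a superposition stored as {basis_state: amplitude}."""
    out = {}
    for state, amp in vec.items():
        result = op(j, state)
        if result is not None:
            sign, new_state = result
            out[new_state] = out.get(new_state, 0) + sign * amp
    return out


def add(u, v):
    out = dict(u)
    for state, amp in v.items():
        out[state] = out.get(state, 0) + amp
    return {state: amp for state, amp in out.items() if amp != 0}


def anticommutator_c_cdag(i, j, vec):
    """Compute (c_i c_j^dagger + c_j^dagger c_i) applied to vec."""
    return add(apply(annihilate, i, apply(create, j, vec)),
               apply(create, j, apply(annihilate, i, vec)))


# Check the anticommutator on every basis state of three modes.
for state in product((0, 1), repeat=3):
    for i in range(3):
        for j in range(3):
            expected = {state: 1} if i == j else {}
            assert anticommutator_c_cdag(i, j, {state: 1}) == expected

# Creating twice in the same mode gives the zero vector.
print(apply(create, 0, apply(create, 0, {(0, 0, 0): 1})))  # {}
```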

Field operators

We have previously mentioned that there can be more than one way of indexing the degrees of freedom in a quantum field. Second quantization indexes the field by enumerating the single-particle quantum states. However, as we have discussed, it is more natural to think about a "field", such as the electromagnetic field, as a set of degrees of freedom indexed by position.

To this end, we can define field operators that create or destroy a particle at a particular point in space. In particle physics, these operators turn out to be more convenient to work with, because they make it easier to formulate theories that satisfy the demands of relativity.

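Single-particle states are usually enumerated in terms of their momenta (as in the particle in a box problem). We can construct field operators by applying the Fourier transform to the creation and annihilation operators for these states. For example, the bosonic field annihilation operator $\phi(\mathbf{r})$ is

$$\phi(\mathbf{r}) \equiv \frac{1}{\sqrt{V}} \sum_j e^{i \mathbf{k}_j \cdot \mathbf{r}} a_j,$$

where $\mathbf{k}_j$ is the wave vector of the $j$-th single-particle state and $V$ is the volume of the box. The bosonic field operators obey the commutation relation

$$[\phi(\mathbf{r}), \phi(\mathbf{r}')] = 0, \quad [\phi^\dagger(\mathbf{r}), \phi^\dagger(\mathbf{r}')] = 0, \quad [\phi(\mathbf{r}), \phi^\dagger(\mathbf{r}')] = \delta^3(\mathbf{r} - \mathbf{r}'),$$

where $\delta(x)$ stands for the Dirac delta function. As before, the fermionic relations are the same, with the commutators replaced by anticommutators.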
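A discrete analogue makes the origin of the delta function easier to see. The sketch below assumes a one-dimensional periodic lattice of `n_sites` points with unit spacing (not part of the text above), on which the Dirac delta becomes a Kronecker delta. Using the commutation relation between the mode operators, the commutator of the field operator at one site with its Hermitian conjugate at another reduces to an average of phase factors over the allowed wave numbers.

```python
import cmath
from math import pi


def field_commutator(x, x_prime, n_sites):
    """Commutator of the lattice field at x with its conjugate at x_prime.

    Assumes the field at site x is (1/sqrt(n_sites)) * sum_j exp(i k_j x) a_j
    with k_j = 2*pi*j/n_sites, so the commutator is a normalized sum of phases.
    """
    total = 0j
    for j in range(n_sites):
        k_j = 2 * pi * j / n_sites
        total += cmath.exp(1j * k_j * (x - x_prime))
    return total / n_sites


print(abs(field_commutator(2, 2, 8)))          # 1.0
print(abs(field_commutator(2, 5, 8)) < 1e-12)  # True
```

At equal sites every phase is 1 and the average is 1; at different sites the phases are roots of unity that sum to zero up to rounding error.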

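It should be emphasized that the field operator is not the same thing as a single-particle wavefunction. The former is an operator acting on the Fock space, and the latter is just a scalar field. However, they are closely related, and are indeed commonly denoted with the same symbol. If we have a Hamiltonian with a space representation, say

$$H = -\frac{\hbar^2}{2m} \sum_i \nabla_i^2 + \sum_{i < j} U(|\mathbf{r}_i - \mathbf{r}_j|),$$

where the indices $i$ and $j$ run over all particles, then the field theory Hamiltonian is

$$H = -\frac{\hbar^2}{2m} \int d^3r \; \phi^\dagger(\mathbf{r}) \nabla^2 \phi(\mathbf{r}) + \frac{1}{2} \int d^3r \int d^3r' \; \phi^\dagger(\mathbf{r}) \phi^\dagger(\mathbf{r}') U(|\mathbf{r} - \mathbf{r}'|) \phi(\mathbf{r}') \phi(\mathbf{r}).$$

This looks remarkably like an expression for the expectation value of the energy, with $\phi$ playing the role of the wavefunction. This relationship between the field operators and wavefunctions makes it very easy to formulate field theories starting from space-projected Hamiltonians.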

Implications of quantum field theory

Unification of fields and particles

The "second quantization" procedure that we have outlined in the previous section takes a set of single-particle quantum states as a starting point. Sometimes, it is impossible to define such single-particle states, and one must proceed directly to quantum field theory. For example, a quantum theory of the electromagnetic field must be a quantum field theory, because it is impossible (for various reasons) to define a wavefunction for a single photon. In such situations, the quantum field theory can be constructed by examining the mechanical properties of the classical field and guessing the corresponding quantum theory. The quantum field theories obtained in this way have the same properties as those obtained using second quantization, such as well-defined creation and annihilation operators obeying commutation or anticommutation relations.

Quantum field theory thus provides a unified framework for describing "field-like" objects (such as the electromagnetic field, whose excitations are photons) and "particle-like" objects (such as electrons, which are treated as excitations of an underlying electron field).

Physical meaning of particle indistinguishability

The second quantization procedure relies crucially on the particles being identical. We would not have been able to construct a quantum field theory from a distinguishable many-particle system, because there would have been no way of separating and indexing the degrees of freedom.

Many physicists prefer to take the converse interpretation, which is that quantum field theory explains what identical particles are. In ordinary quantum mechanics, there is not much theoretical motivation for using symmetric (bosonic) or antisymmetric (fermionic) states, and the need for such states is simply regarded as an empirical fact. From the point of view of quantum field theory, particles are identical if and only if they are excitations of the same underlying quantum field. Thus, the question "why are all electrons identical?" arises from mistakenly regarding individual electrons as fundamental objects, when in fact it is only the electron field that is fundamental.

Particle conservation and non-conservation

During second quantization, we started with a Hamiltonian and state space describing a fixed number of particles ($N$), and ended with a Hamiltonian and state space for an arbitrary number of particles. Of course, in many common situations $N$ is an important and perfectly well-defined quantity, e.g. if we are describing a gas of atoms sealed in a box. From the point of view of quantum field theory, such situations are described by quantum states that are eigenstates of the number operator $\hat{N}$, which measures the total number of particles present. As with any quantum mechanical observable, $\hat{N}$ is conserved if it commutes with the Hamiltonian. In that case, the quantum state is trapped in the $N$-particle subspace of the total Fock space, and the situation could equally well be described by ordinary $N$-particle quantum mechanics.

For example, we can see that the free-boson Hamiltonian described above conserves particle number. Whenever the Hamiltonian operates on a state, each particle destroyed by an annihilation operator $a_k$ is immediately put back by the creation operator $a_k^\dagger$.

On the other hand, it is possible, and indeed common, to encounter quantum states that are not eigenstates of $\hat{N}$, which do not have well-defined particle numbers. Such states are difficult or impossible to handle using ordinary quantum mechanics, but they can be easily described in quantum field theory as quantum superpositions of states having different values of $N$. For example, suppose we have a bosonic field whose particles can be created or destroyed by interactions with a fermionic field. The Hamiltonian of the combined system would be given by the Hamiltonians of the free boson and free fermion fields, plus a "potential energy" term such as

$$H_I = \sum_{k,q} V_q \, (a_q + a_{-q}^\dagger) \, c_{k+q}^\dagger c_k,$$

where $a_k^\dagger$ and $a_k$ denote the bosonic creation and annihilation operators, $c_k^\dagger$ and $c_k$ denote the fermionic creation and annihilation operators, and $V_q$ is a parameter that describes the strength of the interaction. This "interaction term" describes processes in which a fermion in state $k$ either absorbs or emits a boson, thereby being kicked into a different eigenstate $k+q$. (In fact, this type of Hamiltonian is used to describe interaction between conduction electrons and phonons in metals. The interaction between electrons and photons is treated in a similar way, but is a little more complicated because the role of spin must be taken into account.) One thing to notice here is that even if we start out with a fixed number of bosons, we will typically end up with a superposition of states with different numbers of bosons at later times. The number of fermions, however, is conserved in this case.
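The way such an interaction term changes the boson number while leaving the fermion number alone can be illustrated with a deliberately stripped-down toy model: a single boson mode and two fermion levels, with one term that moves the fermion from level 1 to level 2 while absorbing or emitting a boson. The model, the state labelling `(n_boson, (f1, f2))`, and the function name are all assumptions made for this sketch; the momentum sums of the full interaction are dropped.

```python
from math import sqrt


def apply_interaction(vec, coupling=1.0):
    """Apply coupling * (a + a^dagger) c_2^dagger c_1 to a superposition.

    States are keyed as (n_boson, (f1, f2)) and map to amplitudes.
    """
    out = {}
    for (n, (f1, f2)), amp in vec.items():
        if f1 != 1 or f2 != 0:
            continue  # the fermion hop needs level 1 occupied and level 2 empty
        new_f = (0, 1)  # both fermionic signs are +1 for this two-level ordering
        if n > 0:
            # absorption: a|n> = sqrt(n)|n-1>
            key = (n - 1, new_f)
            out[key] = out.get(key, 0.0) + coupling * sqrt(n) * amp
        # emission: a^dagger|n> = sqrt(n+1)|n+1>
        key = (n + 1, new_f)
        out[key] = out.get(key, 0.0) + coupling * sqrt(n + 1) * amp
    return out


start = {(1, (1, 0)): 1.0}  # exactly one boson, fermion in level 1
result = apply_interaction(start)
print(sorted(n for n, _ in result))           # [0, 2]
print({sum(f) for _, f in result})            # {1}
```

Starting from a state with exactly one boson, the result is a superposition of zero-boson and two-boson states, while the fermion count stays at one.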

In condensed matter physics, states with ill-defined particle numbers are particularly important for describing the various superfluids. Many of the defining characteristics of a superfluid arise from the notion that its quantum state is a superposition of states with different particle numbers.

Axiomatic approaches

The preceding description of quantum field theory follows the spirit in which most physicists approach the subject. However, it is not mathematically rigorous. Over the past several decades, there have been many attempts to put quantum field theory on a firm mathematical footing by formulating a set of axioms for it. These attempts fall into two broad classes.

The first class of axioms, first proposed during the 1950s, includes the Wightman, Osterwalder-Schrader, and Haag-Kastler systems. They attempted to formalize the physicists' notion of an "operator-valued field" within the context of functional analysis, and enjoyed limited success. It was possible to prove that any quantum field theory satisfying these axioms satisfied certain general theorems, such as the spin-statistics theorem and the CPT theorem. Unfortunately, it proved extraordinarily difficult to show that any realistic field theory, including the Standard Model, satisfied these axioms. Most of the theories that could be treated with these analytic axioms were physically trivial, being restricted to low dimensions and lacking interesting dynamics. The construction of theories satisfying one of these sets of axioms falls in the field of constructive quantum field theory. Important work was done in this area in the 1970s by Segal, Glimm, Jaffe and others.

During the 1980s, a second set of axioms based on geometric ideas was proposed. This line of investigation, which restricts its attention to a particular class of quantum field theories known as topological quantum field theories, is associated most closely with Michael Atiyah and Graeme Segal, and was notably expanded upon by Edward Witten, Richard Borcherds, and Maxim Kontsevich. However, most physically-relevant quantum field theories, such as the Standard Model, are not topological quantum field theories; the quantum field theory of the fractional quantum Hall effect is a notable exception. The main impact of axiomatic topological quantum field theory has been on mathematics, with important applications in representation theory, algebraic topology, and differential geometry.

Finding the proper axioms for quantum field theory is still an open and difficult problem in mathematics. One of the Millennium Prize Problems—proving the existence of a mass gap in Yang-Mills theory—is linked to this issue.

Phenomena associated with quantum field theory

In the previous part of the article, we described the most general properties of quantum field theories. Some of the quantum field theories studied in various fields of theoretical physics possess additional special properties, such as renormalizability, gauge symmetry, and supersymmetry. These are described in the following sections.

Renormalization

Early in the history of quantum field theory, it was found that many seemingly innocuous calculations, such as the perturbative shift in the energy of an electron due to the presence of the electromagnetic field, give infinite results. The reason is that the perturbation theory for the shift in an energy involves a sum over all other energy levels, and there are infinitely many levels at short distances which each give a finite contribution.

Many of these problems are related to failures in classical electrodynamics that were identified but unsolved in the 19th century, and they basically stem from the fact that many of the supposedly "intrinsic" properties of an electron are tied to the electromagnetic field which it carries around with it. The energy carried by a single electron—its self energy—is not simply the bare value, but also includes the energy contained in its electromagnetic field, its attendant cloud of photons. The energy in a field of a spherical source diverges in both classical and quantum mechanics, but as discovered by Weisskopf, in quantum mechanics the divergence is much milder, going only as the logarithm of the radius of the sphere.

The solution to the problem, presciently suggested by Stueckelberg, independently by Bethe after the crucial experiment by Lamb, implemented at one loop by Schwinger, and systematically extended to all loops by Feynman and Dyson, with converging work by Tomonaga in isolated postwar Japan, is called renormalization. The technique of renormalization recognizes that the problem is essentially purely mathematical, that extremely short distances are at fault. In order to define a theory on a continuum, first place a cutoff on the fields, by postulating that quanta cannot have energies above some extremely high value. This has the effect of replacing continuous space by a structure where very short wavelengths do not exist, as on a lattice. Lattices break rotational symmetry, and one of the crucial contributions made by Feynman, Pauli and Villars, and modernized by 't Hooft and Veltman, is a symmetry preserving cutoff for perturbation theory. There is no known symmetrical cutoff outside of perturbation theory, so for rigorous or numerical work people often use an actual lattice.

The rule is that one computes physical quantities in terms of the observable parameters such as the physical mass, not the bare parameters such as the bare mass. The main point is not that of getting finite quantities (any regularization procedure does that), but to eliminate the regularization parameters by a suitable addition of counterterms to the original Lagrangian. The main requirements on the counterterms are a) Locality (polynomials in the fields and their derivatives) and b) Finiteness (number of monomials in the Lagrangian that remain finite after the introduction of all the necessary counterterms). The reason for (b) is that each new counterterm leaves behind a free parameter of the theory (like physical mass). There is no way such a parameter can be fixed other than by its experimental value, so one gets not a single theory but a family of theories parameterized by as many free parameters as the counterterms added to the Lagrangian. Since a theory with an infinite number of free parameters has virtually no predictive power the finiteness of the number of counterterms is required.

On a lattice, every quantity is finite but depends on the spacing. When taking the limit of zero spacing, we make sure that the physically-observable quantities like the observed electron mass stay fixed, which means that the constants in the Lagrangian defining the theory depend on the spacing. Hopefully, by allowing the constants to vary with the lattice spacing, all the results at long distances become insensitive to the lattice, defining a continuum limit.

The renormalization procedure only works for a certain class of quantum field theories, called renormalizable quantum field theories. A theory is perturbatively renormalizable when the constants in the Lagrangian only diverge at worst as logarithms of the lattice spacing for very short spacings. The continuum limit is then well defined in perturbation theory, and even if it is not fully well defined non-perturbatively, the problems only show up at distance scales which are exponentially small in the inverse coupling for weak couplings. The Standard Model of particle physics is perturbatively renormalizable, and so are its component theories (quantum electrodynamics/electroweak theory and quantum chromodynamics). Of the three components, quantum electrodynamics is believed not to have a continuum limit, while the asymptotically free SU(2) weak isospin and SU(3) strong color interactions are nonperturbatively well defined.

The renormalization group describes how renormalizable theories emerge as the long-distance, low-energy effective field theory for any given high-energy theory. Because of this, renormalizable theories are insensitive to the precise nature of the underlying high-energy, short-distance phenomena. This is a blessing because it allows physicists to formulate low-energy theories without knowing the details of high-energy phenomena. It is also a curse, because once a renormalizable theory like the Standard Model is found to work, it gives very few clues to higher-energy processes. The only way high-energy processes can be seen in the Standard Model is when they allow otherwise forbidden events, or if they predict quantitative relations between the coupling constants.

Gauge freedom

A gauge theory is a theory that admits a symmetry with a local parameter. For example, in every quantum theory the global phase of the wave function is arbitrary and does not represent something physical. Consequently, the theory is invariant under a global change of phases (adding a constant to the phase of all wave functions, everywhere); this is a global symmetry. In quantum electrodynamics, the theory is also invariant under a local change of phase; that is, one may shift the phase of all wave functions so that the shift may be different at every point in space-time. This is a local symmetry. However, in order for a well-defined derivative operator to exist, one must introduce a new field, the gauge field, which also transforms so that the local change of variables (the phase in our example) does not affect the derivative. In quantum electrodynamics this gauge field is the electromagnetic field. Such a local change of variables is termed a gauge transformation.
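As a worked illustration of the local phase symmetry described above, the following sketch checks that the covariant derivative of a charged field picks up only the local phase. It assumes the sign convention $D_\mu = \partial_\mu + ieA_\mu$ (conventions differ between textbooks) and uses $\alpha(x)$, a symbol introduced here, for the position-dependent phase shift.

```latex
% Local phase change of the matter field and the compensating shift of the gauge field
\psi(x) \;\to\; \psi'(x) = e^{i\alpha(x)}\,\psi(x), \qquad
A_\mu(x) \;\to\; A'_\mu(x) = A_\mu(x) - \frac{1}{e}\,\partial_\mu \alpha(x)

% The ordinary derivative alone is not covariant: it produces an extra term
\partial_\mu \psi' = e^{i\alpha}\left(\partial_\mu \psi + i\,(\partial_\mu \alpha)\,\psi\right)

% The shift of A_\mu cancels that extra term in the covariant derivative
D'_\mu \psi' = \left(\partial_\mu + ieA'_\mu\right) e^{i\alpha}\psi
             = e^{i\alpha}\left(\partial_\mu \psi + i(\partial_\mu\alpha)\psi + ieA_\mu \psi - i(\partial_\mu \alpha)\psi\right)
             = e^{i\alpha}\, D_\mu \psi
```

Because $D_\mu\psi$ transforms with the same phase factor as $\psi$ itself, any expression built from $\bar\psi$ and $D_\mu\psi$ is unchanged by the local transformation.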

In quantum field theory the excitations of fields represent particles. The particle associated with excitations of the gauge field is the gauge boson, which is the photon in the case of quantum electrodynamics.

The degrees of freedom in quantum field theory are local fluctuations of the fields. The existence of a gauge symmetry reduces the number of degrees of freedom, simply because some fluctuations of the fields can be transformed to zero by gauge transformations, so they are equivalent to having no fluctuations at all and therefore have no physical meaning. Such fluctuations are usually called "non-physical degrees of freedom" or gauge artifacts; usually some of them have a negative norm, making them inadequate for a consistent theory. Therefore, if a classical field theory has a gauge symmetry, its quantized version (i.e. the corresponding quantum field theory) must have this symmetry as well. In other words, a gauge symmetry cannot have a quantum anomaly. If a gauge symmetry is anomalous (i.e. not preserved in the quantum theory), the theory is inconsistent. For example, a gauge anomaly in quantum electrodynamics would require the appearance of photons with longitudinal polarization and with polarization in the time direction, the latter having a negative norm, rendering the theory inconsistent. Another possibility would be for these photons to appear only in intermediate processes but not in the final products of any interaction, making the theory non-unitary and again inconsistent (see optical theorem).

In general, the gauge transformations of a theory consist of several different transformations, which may not commute. Together, these transformations are described by a mathematical object known as a gauge group. Infinitesimal gauge transformations are the generators of the gauge group. Therefore the number of gauge bosons is the dimension of the group (i.e. the number of generators forming a basis).

All the fundamental interactions in nature are described by gauge theories. These are:

- Quantum electrodynamics, whose gauge transformation is a local change of phase, so that the gauge group is U(1). The gauge boson is the photon.

- Quantum chromodynamics, whose gauge group is SU(3). The gauge bosons are eight gluons.

- The electroweak theory, whose gauge group is U(1) × SU(2) (a direct product of U(1) and SU(2)).

- Gravity, whose classical theory is general relativity, admits the equivalence principle which is a form of gauge symmetry.
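The rule that the number of gauge bosons equals the dimension of the gauge group can be checked for the groups in the list above. The following is a minimal sketch; the function names and the `GAUGE_GROUPS` table are illustrative, not part of any library. It uses the standard facts that U(n) has n² generators, SU(n) has n² − 1, and the dimension of a direct product is the sum of the dimensions of its factors.

```python
def u_dimension(n: int) -> int:
    """Number of generators of U(n), which is n*n."""
    if n < 1:
        raise ValueError("U(n) requires n >= 1")
    return n * n


def su_dimension(n: int) -> int:
    """Number of generators of SU(n), which is n*n - 1."""
    if n < 2:
        raise ValueError("SU(n) is used here for n >= 2")
    return n * n - 1


def gauge_boson_count(factor_dimensions):
    """Dimension of a direct product of groups: sum of the factor dimensions."""
    return sum(factor_dimensions)


# Illustrative table: each gauge group is a list of the dimensions of its factors.
GAUGE_GROUPS = {
    "QED, U(1)": [u_dimension(1)],
    "QCD, SU(3)": [su_dimension(3)],
    "Electroweak, U(1) x SU(2)": [u_dimension(1), su_dimension(2)],
}

if __name__ == "__main__":
    for name, factors in GAUGE_GROUPS.items():
        print(name, "->", gauge_boson_count(factors), "gauge boson(s)")
```

The counts reproduce the single photon of QED and the eight gluons of QCD; the electroweak group gives four gauge bosons, which after symmetry breaking appear as the photon, the W⁺, the W⁻ and the Z.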

Supersymmetry

Supersymmetry assumes that every fundamental fermion has a superpartner that is a boson and vice versa. It was introduced in order to solve the so-called hierarchy problem, that is, to explain why particles not protected by any symmetry (like the Higgs boson) do not receive radiative corrections that drive their masses up to the larger scales (GUT, Planck...). It was soon realized that supersymmetry has other interesting properties: its gauged version is an extension of general relativity (supergravity), and it is a key ingredient for the consistency of string theory.

The way supersymmetry protects the hierarchies is the following: since for every particle there is a superpartner with the same mass, any loop in a radiative correction is cancelled by the loop corresponding to its superpartner, rendering the theory UV finite.

Since no superpartners have yet been observed, if supersymmetry exists it must be broken (through a so-called soft term, which breaks supersymmetry without ruining its helpful features). The simplest models of this breaking require that the energy of the superpartners not be too high; in these cases, supersymmetry is expected to be observed by experiments at the Large Hadron Collider.

Notes

- ↑ Weinberg, S. Quantum Field Theory, Vols. I to III, 2000, Cambridge University Press: Cambridge, UK.

- ↑ Abraham Pais, Inward Bound: Of Matter and Forces in the Physical World, ISBN 0198519974. Pais recounts his astonishment at the rapidity with which Feynman could calculate using his method. Feynman's method is now part of the standard methods for physicists.

Suggested reading for the layman

- Gribbin, John ; Q is for Quantum: Particle Physics from A to Z, Weidenfeld & Nicolson (1998) [ISBN 0297817523] [1]

- Feynman, Richard ; The Character of Physical Law [2]

- Feynman, Richard ; QED [3]

Suggested reading

- Wilczek, Frank ; Quantum Field Theory, Review of Modern Physics 71 (1999) S85-S95. Review article written by a master of Q.C.D., Nobel laureate 2003. Full text available at : hep-th/9803075

- Ryder, Lewis H. ; Quantum Field Theory (Cambridge University Press, 1985), ISBN 0-521-33859-X. Introduction to relativistic Q.F.T. for particle physics.

- Zee, Anthony ; Quantum Field Theory in a Nutshell, Princeton University Press (2003) ISBN 0-691-01019-6.

- Peskin, M and Schroeder, D. ;An Introduction to Quantum Field Theory (Westview Press, 1995) ISBN 0-201-50397-2

- Weinberg, Steven ; The Quantum Theory of Fields (3 volumes) Cambridge University Press (1995). A monumental treatise on Q.F.T. written by a leading expert, Nobel laureate 1979.

- Loudon, Rodney ; The Quantum Theory of Light (Oxford University Press, 1983), ISBN 0-19-851155-8

- Greiner, Walter and Müller, Berndt (2000). Gauge Theory of Weak Interactions. [S.l.]: Springer. ISBN 3-540-67672-4

- Paul H. Frampton, Gauge Field Theories, Frontiers in Physics, Addison-Wesley (1986), Second Edition, Wiley (2000).

- Gordon L. Kane (1987). Modern Elementary Particle Physics. [S.l.]: Perseus Books. ISBN 0-201-11749-5

- Yndurain, Francisco Jose; Relativistic Quantum Mechanics and Introduction to Field Theory (Springer, 1st edition, 1996), ISBN 978-3540604532

- Kleinert, Hagen and Verena Schulte-Frohlinde, Critical Properties of φ4-Theories, World Scientific (Singapore, 2001); Paperback ISBN 981-02-4658-7 (also available online)

- Kleinert, Hagen, Multivalued Fields in Condensed Matter, Electrodynamics, and Gravitation, World Scientific (Singapore, 2008) (also available online)

- Itzykson, Claude and Zuber, Jean Bernard (1980). Quantum Field Theory. [S.l.]: McGraw-Hill International Book Co.,. ISBN 0-07-032071-3

- Bogoliubov, Nikolay, Shirkov, Dmitry (1982). Quantum Fields. [S.l.]: Benjamin-Cummings Pub. Co. ISBN 0805309837

Advanced reading

- N. N. Bogoliubov, A. A. Logunov, A. I. Oksak, I. T. Todorov (1990): General Principles of Quantum Field Theory. Dordrecht; Boston, Kluwer Academic Publishers. ISBN 079230540X. ISBN 978-0792305408.

External links

- Siegel, Warren ; Fields (also available from arXiv:hep-th/9912205)

- 't Hooft, Gerard ; The Conceptual Basis of Quantum Field Theory, Handbook of the Philosophy of Science, Elsevier (to be published). Review article written by a master of gauge theories, Nobel laureate 1999.

- Srednicki, Mark ; Quantum Field Theory

- Kuhlmann, Meinard ; Quantum Field Theory, Stanford Encyclopedia of Philosophy

- Quantum field theory textbooks: a list with links to amazon.com

- Pedagogic Aids to Quantum Field Theory. Click on the link "Introduction" for a simplified introduction to QFT suitable for someone familiar with quantum mechanics.


Quantum electrodynamics (QED) is a relativistic quantum field theory of electrodynamics. QED was developed by a number of physicists, beginning in the late 1920s. It basically describes how light and matter interact. More specifically it deals with the interactions between electrons, positrons and photons. QED mathematically describes all phenomena involving electrically charged particles interacting by means of exchange of photons. It has been called "the jewel of physics" for its extremely accurate predictions of quantities like the anomalous magnetic moment of the electron, and the Lamb shift of the energy levels of hydrogen.[1]

In technical terms, QED can be described as a perturbation theory of the electromagnetic quantum vacuum.

History

The word 'quantum' is Latin, meaning "how much" (neut. sing. of quantus "how great").[2] The word 'electrodynamics' was coined by André-Marie Ampère in 1822.[3] The word 'quantum', as used in physics, i.e. with reference to the notion of count, was first used by Max Planck, in 1900 and reinforced by Einstein in 1905 with his use of the term light quanta.

Quantum theory began in 1900, when Max Planck assumed that energy is quantized in order to derive a formula predicting the observed frequency dependence of the energy emitted by a black body. This dependence is completely at variance with classical physics. In 1905, Einstein explained the photoelectric effect by postulating that light energy comes in quanta later called photons. In 1913, Bohr invoked quantization in his proposed explanation of the spectral lines of the hydrogen atom. In 1924, Louis de Broglie proposed a quantum theory of the wave-like nature of subatomic particles. The phrase "quantum physics" was first employed in Johnston's Planck's Universe in Light of Modern Physics. These theories, while they fit the experimental facts to some extent, were strictly phenomenological: they provided no rigorous justification for the quantization they employed.

Modern quantum mechanics was born in 1925 with Werner Heisenberg's matrix mechanics and Erwin Schrödinger's wave mechanics and the Schrödinger equation, which was a non-relativistic generalization of de Broglie's (1925) relativistic approach. Schrödinger subsequently showed that these two approaches were equivalent. In 1927, Heisenberg formulated his uncertainty principle, and the Copenhagen interpretation of quantum mechanics began to take shape. Around this time, Paul Dirac, in work culminating in his 1930 monograph, joined quantum mechanics and special relativity, pioneered the use of operator theory, and devised the bra-ket notation widely used since. In 1932, John von Neumann formulated the rigorous mathematical basis for quantum mechanics as the theory of linear operators on Hilbert spaces. This and other work from the founding period remains valid and widely used.

Quantum chemistry began with Walter Heitler and Fritz London's 1927 quantum account of the covalent bond of the hydrogen molecule. Linus Pauling and others contributed to the subsequent development of quantum chemistry.

The application of quantum mechanics to fields rather than single particles, resulting in what are known as quantum field theories, began in 1927. Early contributors included Dirac, Wolfgang Pauli, Weisskopf, and Jordan. This line of research culminated in the 1940s in the quantum electrodynamics (QED) of Richard Feynman, Freeman Dyson, Julian Schwinger, and Sin-Itiro Tomonaga, for which Feynman, Schwinger and Tomonaga received the 1965 Nobel Prize in Physics. QED, a quantum theory of electrons, positrons, and the electromagnetic field, was the first satisfactory quantum description of a physical field and of the creation and annihilation of quantum particles.

QED involves a covariant and gauge invariant prescription for the calculation of observable quantities. Feynman's mathematical technique, based on his diagrams, initially seemed very different from the field-theoretic, operator-based approach of Schwinger and Tomonaga, but Freeman Dyson later showed that the two approaches were equivalent. The renormalization procedure for eliminating the awkward infinite predictions of quantum field theory was first implemented in QED. Even though renormalization works very well in practice, Feynman was never entirely comfortable with its mathematical validity, even referring to renormalization as a "shell game" and "hocus pocus". (Feynman, 1985: 128)

QED has served as the model and template for all subsequent quantum field theories. One such subsequent theory is quantum chromodynamics, which began in the early 1960s and attained its present form in the 1973 work by H. David Politzer, Sidney Coleman, David Gross and Frank Wilczek. Building on the pioneering work of Schwinger, Peter Higgs, Goldstone, and others, Sheldon Glashow, Steven Weinberg and Abdus Salam independently showed how the weak nuclear force and quantum electrodynamics could be merged into a single electroweak force.

Physical interpretation of QED

In classical optics, light travels over all allowed paths and their interference results in Fermat's principle. Similarly, in QED, light (or any other particle like an electron or a proton) passes over every possible path allowed by apertures or lenses. The observer (at a particular location) simply detects the mathematical result of all wave functions added up, as a sum of all line integrals. In other interpretations, paths are viewed as non-physical mathematical constructs that are equivalent to other, possibly infinite, sets of mathematical expansions. According to QED, light has an amplitude to travel slower or faster than c, but travels at velocity c on average.[4]

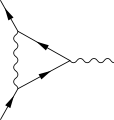

Physically, QED describes charged particles (and their antiparticles) interacting with each other by the exchange of photons. The magnitude of these interactions can be computed using perturbation theory; these rather complex formulas have a remarkable pictorial representation as Feynman diagrams. QED was the theory to which Feynman diagrams were first applied. These diagrams were invented on the basis of Lagrangian mechanics. Using a Feynman diagram, one considers every possible path between the start and end points. Each path is assigned a complex-valued probability amplitude, and the actual amplitude we observe is the sum of all amplitudes over all possible paths. The paths with stationary phase contribute most, because they do not interfere destructively with neighboring counter-phase paths; this results in the stationary classical path between the two points.
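The dominance of stationary-phase paths can be illustrated with a toy sum over paths. The sketch below is not a QED calculation. It assumes a one-parameter family of paths labelled by a deviation `a` from the classical path, with a hypothetical quadratic phase `K * a**2` that is stationary at `a = 0`; the constants `K`, `NEAR` and `FAR` are arbitrary illustrative choices.

```python
import cmath


def path_sum(k, a_min, a_max, step=1e-4):
    """Midpoint-rule sum of the amplitudes exp(i*k*a**2) for deviations a in [a_min, a_max]."""
    n = int(round((a_max - a_min) / step))
    total = 0j
    for j in range(n):
        a = a_min + (j + 0.5) * step
        total += cmath.exp(1j * k * a * a)
    return total * step


K = 50.0     # hypothetical curvature of the phase around the stationary path
NEAR = 0.5   # deviations with |a| < NEAR count as "near the stationary path"
FAR = 2.5    # largest deviation included in the sum

# Contribution of paths close to the stationary one.
near = path_sum(K, -NEAR, NEAR)
# Contribution of all remaining paths, on both sides of the stationary one.
far = path_sum(K, -FAR, -NEAR) + path_sum(K, NEAR, FAR)

if __name__ == "__main__":
    print("near-stationary contribution:", abs(near))
    print("far contribution:            ", abs(far))
```

Although the far region covers four times as much of the range of `a`, its rapidly rotating phases largely cancel, so its net contribution is several times smaller than that of the narrow region around the stationary path.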

QED doesn't predict what will happen in an experiment, but it can predict the probability of what will happen in an experiment, which is how (statistically) it is experimentally verified. Predictions of QED agree with experiments to an extremely high degree of accuracy: currently about 10⁻¹² (and limited by experimental errors); for details see precision tests of QED. This makes QED one of the most accurate physical theories constructed thus far.

Near the end of his life, Richard P. Feynman gave a series of lectures on QED intended for the lay public. These lectures were transcribed and published as Feynman (1985), QED: The strange theory of light and matter, a classic non-mathematical exposition of QED from the point of view articulated above.

Mathematics

Mathematically, QED is an abelian gauge theory with the symmetry group U(1). The gauge field, which mediates the interaction between the charged spin-1/2 fields, is the electromagnetic field. The QED Lagrangian for a spin-1/2 field interacting with the electromagnetic field is given by the real part of

$$\mathcal{L} = \bar\psi \left( i\gamma^\mu D_\mu - m \right) \psi - \frac{1}{4} F_{\mu\nu} F^{\mu\nu}$$

where

- $\gamma^\mu$ are Dirac matrices;

- $\psi$ is a bispinor field of spin-1/2 particles (e.g. electron-positron field);

- $\bar\psi \equiv \psi^\dagger \gamma^0$, called "psi-bar", is sometimes referred to as the Dirac adjoint;

- $D_\mu = \partial_\mu + ieA_\mu + ieB_\mu$ is the gauge covariant derivative;

- $e$ is the coupling constant, equal to the electric charge of the bispinor field;

- $A_\mu$ is the covariant four-potential of the electromagnetic field generated by the electron itself;

- $B_\mu$ is the external field imposed by an external source;

- $F_{\mu\nu} = \partial_\mu A_\nu - \partial_\nu A_\mu$ is the electromagnetic field tensor.

Euler-Lagrange equations

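To begin, substituting the definition of D, $D_\mu = \partial_\mu + ieA_\mu + ieB_\mu$, into the Lagrangian gives us:

$$\mathcal{L} = i\bar\psi\gamma^\mu\partial_\mu\psi - e\bar\psi\gamma^\mu\left(A_\mu + B_\mu\right)\psi - m\bar\psi\psi - \frac{1}{4}F_{\mu\nu}F^{\mu\nu}$$

Next, we can substitute this Lagrangian into the Euler-Lagrange equation of motion for a field:

$$\partial_\mu\left(\frac{\partial\mathcal{L}}{\partial(\partial_\mu\psi)}\right) - \frac{\partial\mathcal{L}}{\partial\psi} = 0 \qquad (2)$$

to find the field equations for QED.

The two terms from this Lagrangian are then:

$$\partial_\mu\left(\frac{\partial\mathcal{L}}{\partial(\partial_\mu\psi)}\right) = \partial_\mu\left(i\bar\psi\gamma^\mu\right)$$

$$\frac{\partial\mathcal{L}}{\partial\psi} = -e\bar\psi\gamma^\mu\left(A_\mu + B_\mu\right) - m\bar\psi$$

Substituting these two back into the Euler-Lagrange equation (2) results in:

$$i\,\partial_\mu\bar\psi\,\gamma^\mu + e\bar\psi\gamma^\mu\left(A_\mu + B_\mu\right) + m\bar\psi = 0$$

with Hermitian conjugate:

$$i\gamma^\mu\partial_\mu\psi - e\gamma^\mu\left(A_\mu + B_\mu\right)\psi - m\psi = 0$$

Bringing the middle term to the right-hand side transforms this second equation into:

$$i\gamma^\mu\partial_\mu\psi - m\psi = e\gamma^\mu\left(A_\mu + B_\mu\right)\psi$$

The left-hand side is like the original Dirac equation and the right-hand side is the interaction with the electromagnetic field.

One further important equation can be found by substituting the Lagrangian into another Euler-Lagrange equation, this time for the field $A_\mu$:

$$\partial_\nu\left(\frac{\partial\mathcal{L}}{\partial(\partial_\nu A_\mu)}\right) - \frac{\partial\mathcal{L}}{\partial A_\mu} = 0 \qquad (3)$$

The two terms this time are:

$$\partial_\nu\left(\frac{\partial\mathcal{L}}{\partial(\partial_\nu A_\mu)}\right) = \partial_\nu\left(\partial^\mu A^\nu - \partial^\nu A^\mu\right)$$

$$\frac{\partial\mathcal{L}}{\partial A_\mu} = -e\bar\psi\gamma^\mu\psi$$

and these two terms, when substituted back into (3) give us:

$$\partial_\nu F^{\nu\mu} = e\bar\psi\gamma^\mu\psi$$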

Using perturbation theory, we could divide result into different parts according to the order of electric charge :

here we use instead of to avoid confusion between electric charge and natural logarithm

The zeroth order result is:

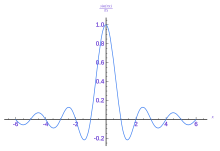

is the 3-dimension momentum space expression of wave function:

The 1st order result (ignore the self energy )is:

The term is the external field in 4-dimension momentum space:

The solution of can be achieved in the same way(using Lorentz gauge ):

in which:

In pictures

The part of the Lagrangian containing the electromagnetic field tensor describes the free evolution of the electromagnetic field, whereas the Dirac-like equation with the gauge covariant derivative describes the free evolution of the electron and positron fields as well as their interaction with the electromagnetic field.

- The one-loop contribution to the vacuum polarization function

- The one-loop contribution to the electron self-energy function

- The one-loop contribution to the vertex function

References

- ↑ Feynman, Richard (1985). «Chapter 1». QED: The Strange Theory of Light and Matter. [S.l.]: Princeton University Press. p. 6

- ↑ Online Etymology Dictionary

- ↑ Grandy, W.T. (2001). Relativistic Quantum Mechanics of Leptons and Fields, Springer.

- ↑ Feynman, Richard P. QED: The Strange Theory of Light and Matter, pp. 89-90: "the light has an amplitude to go faster or slower than the speed c, but these amplitudes cancel each other out over long distances"; see also accompanying text

Further reading

Books

- Feynman, Richard Phillips (1998). Quantum Electrodynamics. [S.l.]: Westview Press; New Ed edition. ISBN 978-0201360752

- Tannoudji-Cohen, Claude; Dupont-Roc, Jacques, and Grynberg, Gilbert (1997). Photons and Atoms: Introduction to Quantum Electrodynamics. [S.l.]: Wiley-Interscience. ISBN 978-0471184331

- De Broglie, Louis (1925). Recherches sur la theorie des quanta [Research on quantum theory]. France: Wiley-Interscience

- Jauch, J.M.; Rohrlich, F. (1980). The Theory of Photons and Electrons. [S.l.]: Springer-Verlag. ISBN 978-0387072951

- Miller, Arthur I. (1995). Early Quantum Electrodynamics : A Sourcebook. [S.l.]: Cambridge University Press. ISBN 978-0521568913

- Schweber, Silvian, S. (1994). QED and the Men Who Made It. [S.l.]: Princeton University Press. ISBN 978-0691033273

- Schwinger, Julian (1958). Selected Papers on Quantum Electrodynamics. [S.l.]: Dover Publications. ISBN 978-0486604442

- Greiner, Walter; Bromley, D.A.,Müller, Berndt. (2000). Gauge Theory of Weak Interactions. [S.l.]: Springer. ISBN 978-3540676720

- Kane, Gordon, L. (1993). Modern Elementary Particle Physics. [S.l.]: Westview Press. ISBN 978-0201624601

- Peter W. Milonni: The quantum vacuum - an introduction to quantum electrodynamics. Acad. Press, San Diego 1994, ISBN 0-12-498080-5

Journals

- J.M. Dudley and A.M. Kwan, "Richard Feynman's popular lectures on quantum electrodynamics: The 1979 Robb Lectures at Auckland University," American Journal of Physics Vol. 64 (June 1996) 694-698.

Challenged by Utan Skriboa, Nrahif Sansbah and Sarah Carpenter, Jacobs School of Engineering via University of California San Diego, (2003)

External links

- Feynman's Nobel Prize lecture describing the evolution of QED and his role in it

- Feynman's New Zealand lectures on QED for non-physicists


In quantum physics, the Heisenberg uncertainty principle states that certain pairs of physical properties, like position and momentum, cannot both be known to arbitrary precision. That is, the more precisely one property is known, the less precisely the other can be known. It is impossible to measure simultaneously both the position and the velocity of a microscopic particle to arbitrary accuracy. This is not a statement about the limitations of a researcher's ability to measure particular quantities of a system, but rather about the nature of the system itself, and hence it expresses a property of the universe.

In quantum mechanics, a particle is described by a wave. The position is where the wave is concentrated and the momentum is determined by the wavelength. The position is uncertain to the degree that the wave is spread out, and the momentum is uncertain to the degree that the wavelength is ill-defined.

The only kind of wave with a definite position is concentrated at one point, and such a wave has an indefinite wavelength. Conversely, the only kind of wave with a definite wavelength is an infinite regular periodic oscillation over all space, which has no definite position. So in quantum mechanics, there are no states that describe a particle with both a definite position and a definite momentum. The more precise the position, the less precise the momentum.

The uncertainty principle can be restated in terms of measurements, which involves collapse of the wavefunction. When the position is measured, the wavefunction collapses to a narrow bump near the measured value, and the momentum wavefunction becomes spread out. The particle's momentum is left uncertain by an amount inversely proportional to the accuracy of the position measurement. The amount of left-over uncertainty can never be reduced below the limit set by the uncertainty principle, no matter what the measurement process.

This means that the uncertainty principle is related to the observer effect, with which it is often conflated. The uncertainty principle sets a lower limit to how small the momentum disturbance in an accurate position experiment can be, and vice versa for momentum experiments.

A mathematical statement of the principle is that every quantum state has the property that the root-mean-square (RMS) deviation of the position from its mean (the standard deviation of the X-distribution):

$$\Delta X = \sqrt{\langle (X - \langle X \rangle)^2 \rangle}$$

times the RMS deviation of the momentum from its mean (the standard deviation of P):

$$\Delta P = \sqrt{\langle (P - \langle P \rangle)^2 \rangle}$$

can never be smaller than a fixed fraction of Planck's constant:

$$\Delta X \, \Delta P \ge \frac{\hbar}{2}$$

Any measurement of the position with accuracy $\Delta X$ collapses the quantum state, making the standard deviation of the momentum $\Delta P$ larger than $\hbar / (2 \Delta X)$.
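The bound on the product of the two standard deviations can be checked numerically for simple states. The sketch below assumes units in which ħ = 1 and real wavefunctions (so the mean momentum vanishes); the function names, the grid limits and the two test states are illustrative choices. It evaluates the standard deviation of position directly and obtains the momentum spread from the integral of the squared slope of the wavefunction.

```python
import math

HBAR = 1.0  # assumption: units in which hbar = 1


def uncertainty_product(psi, x_min=-10.0, x_max=10.0, n=4000):
    """Return sigma_x * sigma_p for a real wavefunction psi(x) that vanishes at the grid edges."""
    dx = (x_max - x_min) / n
    h = 1e-5  # step for the central finite-difference derivative
    norm = mean_x = mean_x2 = mean_p2 = 0.0
    for j in range(n):
        x = x_min + (j + 0.5) * dx
        value = psi(x)
        slope = (psi(x + h) - psi(x - h)) / (2.0 * h)
        norm += value * value * dx
        mean_x += x * value * value * dx
        mean_x2 += x * x * value * value * dx
        # <p^2> = hbar^2 * integral of |d psi / dx|^2 for a normalizable state
        mean_p2 += HBAR ** 2 * slope * slope * dx
    mean_x /= norm
    mean_x2 /= norm
    mean_p2 /= norm
    sigma_x = math.sqrt(mean_x2 - mean_x ** 2)
    sigma_p = math.sqrt(mean_p2)  # <p> = 0 for a real wavefunction
    return sigma_x * sigma_p


def gaussian(x):
    """Unnormalized Gaussian wave packet with position spread 1."""
    return math.exp(-x * x / 4.0)


def first_excited(x):
    """Unnormalized first excited harmonic-oscillator state built on the same Gaussian."""
    return x * math.exp(-x * x / 4.0)


if __name__ == "__main__":
    print("Gaussian:     ", uncertainty_product(gaussian))
    print("First excited:", uncertainty_product(first_excited))
```

The Gaussian packet reaches the lower limit of ħ/2, while the excited state gives 3ħ/2; neither falls below the bound.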

Historical introduction

Werner Heisenberg formulated the uncertainty principle in Niels Bohr's institute at Copenhagen, while working on the mathematical foundations of quantum mechanics.

In 1925, following pioneering work with Hendrik Kramers, Heisenberg developed matrix mechanics, which replaced the ad-hoc old quantum theory with modern quantum mechanics. The central assumption was that the classical motion was not precise at the quantum level, and electrons in an atom did not travel on sharply defined orbits. Rather, the motion was smeared out in a strange way: its Fourier transform in time involved only those frequencies that could be seen in quantum jumps.

Heisenberg's paper did not admit any unobservable quantities like the exact position of the electron in an orbit at any time; he only allowed the theorist to talk about the Fourier components of the motion. Since the Fourier components were not defined at the classical frequencies, they could not be used to construct an exact trajectory, so that the formalism could not answer certain overly precise questions about where the electron was or how fast it was going.

The most striking property of Heisenberg's infinite matrices for the position and momentum is that they do not commute. His central result was the canonical commutation relation:

$$[X, P] = XP - PX = i\hbar$$

and this result did not at first have a clear physical interpretation.

In March 1927, working in Bohr's institute, Heisenberg formulated the principle of uncertainty, thereby laying the foundation of what became known as the Copenhagen interpretation of quantum mechanics. Heisenberg showed that the commutation relations imply an uncertainty, or in Bohr's language a complementarity. Any two variables that do not commute cannot be measured simultaneously: the more precisely one is known, the less precisely the other can be known.

One way to understand the complementarity between position and momentum is by wave-particle duality. If a particle described by a plane wave passes through a narrow slit in a wall, like a water wave passing through a narrow channel, the particle diffracts and its wave comes out in a range of angles. The narrower the slit, the wider the diffracted wave and the greater the uncertainty in momentum afterwards. The laws of diffraction require that the spread in angle $\Delta\theta$ is about $\lambda/d$, where $d$ is the slit width and $\lambda$ is the wavelength. From the de Broglie relation, the size of the slit and the range in momentum of the diffracted wave are related by Heisenberg's rule:

$$\Delta x \, \Delta p \approx h$$

In his celebrated paper (1927), Heisenberg established this expression as the minimum amount of unavoidable momentum disturbance caused by any position measurement[1], but he did not give a precise definition for the uncertainties Δx and Δp. Instead, he gave some plausible estimates in each case separately. In his Chicago lecture[2] he refined his principle:

$$\Delta x \, \Delta p \gtrsim h \qquad (1)$$

But it was Kennard[3] in 1927 who first proved the modern inequality:

$$\sigma_x \sigma_p \ge \frac{\hbar}{2} \qquad (2)$$

where $\hbar = h/2\pi$, and σx, σp are the standard deviations of position and momentum. Heisenberg himself only proved relation (2) for the special case of Gaussian states.[2]

Uncertainty principle and observer effect

The uncertainty principle is often explained as the statement that the measurement of position necessarily disturbs a particle's momentum, and vice versa—i.e., that the uncertainty principle is a manifestation of the observer effect.

This common explanation is incorrect, because the uncertainty principle is not caused by observer-effect measurement disturbance. For example, sometimes the measurement can be performed far away in ways which cannot possibly "disturb" the particle in any classical sense. But the distant measurement (of momentum for instance) still causes the wavefunction to collapse and makes determination (of position for instance) impossible. This queer mechanism of quantum mechanics is the basis of quantum cryptography, where the measurement of a value on one of two entangled particles at one location forces, via the uncertainty principle, a property of a distant particle to become indeterminate and hence unmeasurable. If two photons are emitted in opposite directions from the decay of positronium, the momenta of the two photons are opposite. By measuring the momentum of one particle, the momentum of the other is determined, making its position indeterminate. This case is subtler, because it is impossible to introduce more uncertainties by measuring a distant particle, but it is possible to restrict the uncertainties in different ways, with different statistical properties, depending on what property of the distant particle you choose to measure. By restricting the uncertainty in p to be very small by a distant measurement, the remaining uncertainty in x stays large. (This example was actually the basis of Albert Einstein's important suggestion of the EPR paradox in 1935.)

This disturbance explanation is also incorrect because it makes it seem that the disturbances are somehow conceptually avoidable — that there are states of the particle with definite position and momentum, but the experimental devices we have could never be good enough to produce those states. In fact, states with both definite position and momentum just do not exist in quantum mechanics, so it is not the measurement equipment that is at fault.

It is also misleading in another way, because sometimes it is a failure to measure the particle that produces the disturbance. For example, if a perfect photographic film contains a small hole, and an incident photon is not observed, then its momentum becomes uncertain by a large amount. By not observing the photon, we discover indirectly that it went through the hole, revealing the photon's position.

But Heisenberg did not focus on the mathematics of quantum mechanics; he was primarily concerned with establishing that the uncertainty is actually a property of the world, that is, that it is in fact physically impossible to measure the position and momentum of a particle to a precision better than that allowed by quantum mechanics. To do this, he used physical arguments based on the existence of quanta, but not the full quantum mechanical formalism.

This was a surprising prediction of quantum mechanics, and not yet accepted. Many people would have considered it a flaw that there are no states of definite position and momentum. Heisenberg was trying to show this was not a bug, but a feature—a deep, surprising aspect of the universe. To do this, he could not just use the mathematical formalism, because it was the mathematical formalism itself that he was trying to justify.

Heisenberg's microscope

One way in which Heisenberg originally argued for the uncertainty principle is by using an imaginary microscope as a measuring device.[2] He imagines an experimenter trying to measure the position and momentum of an electron by shooting a photon at it.

If the photon has a short wavelength, and therefore a large momentum, the position can be measured accurately. But the photon scatters in a random direction, transferring a large and uncertain amount of momentum to the electron. If the photon has a long wavelength and low momentum, the collision doesn't disturb the electron's momentum very much, but the scattering will reveal its position only vaguely.

If a large aperture is used for the microscope, the electron's location can be well resolved (see Rayleigh criterion); but by the principle of conservation of momentum, the transverse momentum of the incoming photon and hence the new momentum of the electron resolves poorly. If a small aperture is used, the accuracy of the two resolutions is the other way around.

The trade-offs imply that no matter what photon wavelength and aperture size are used, the product of the uncertainty in measured position and measured momentum is greater than or equal to a lower bound, which is up to a small numerical factor equal to Planck's constant.[4] Heisenberg did not care to formulate the uncertainty principle as an exact bound, and preferred to use it as a heuristic quantitative statement, correct up to small numerical factors.
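The trade-off can be sketched as an order-of-magnitude estimate. The symbols here are assumptions introduced for illustration: $\lambda$ is the photon wavelength and $\theta$ is the half-angle of the cone of light accepted by the aperture. The first line is the resolving power of the microscope, and the second is the spread in the transverse momentum of a photon of momentum $h/\lambda$ scattered anywhere into that cone. Numerical factors of order one are dropped throughout.

```latex
\begin{align}
\Delta x &\approx \frac{\lambda}{\sin\theta} \\
\Delta p &\approx \frac{h}{\lambda}\,\sin\theta \\
\Delta x\,\Delta p &\approx h
\end{align}
```

Both the wavelength and the aperture angle cancel in the product, which is why no choice of either improves on the bound.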

Critical reactions

The Copenhagen interpretation of quantum mechanics and Heisenberg's Uncertainty Principle were in fact seen as twin targets by detractors who believed in an underlying determinism and realism. Within the Copenhagen interpretation of quantum mechanics, there is no fundamental reality the quantum state describes, just a prescription for calculating experimental results. There is no way to say what the state of a system fundamentally is, only what the result of observations might be.

Albert Einstein believed that randomness is a reflection of our ignorance of some fundamental property of reality, while Niels Bohr believed that the probability distributions are fundamental and irreducible, and depend on which measurements we choose to perform. Einstein and Bohr debated the uncertainty principle for many years.

Einstein's slit

The first of Einstein's thought experiments challenging the uncertainty principle went as follows:

- Consider a particle passing through a slit of width d. The slit introduces an uncertainty in momentum of approximately h/d because the particle passes through the wall. But let us determine the momentum of the particle by measuring the recoil of the wall. In doing so, we find the momentum of the particle to arbitrary accuracy by conservation of momentum.

Bohr's response was that the wall is quantum mechanical as well, and that to measure the recoil to accuracy $\Delta p$ the momentum of the wall must be known to this accuracy before the particle passes through. This introduces an uncertainty in the position of the wall, and therefore in the position of the slit, equal to $h/\Delta p$, and if the wall's momentum is known precisely enough to measure the recoil, the slit's position is uncertain enough to disallow a position measurement.

A similar analysis with particles diffracting through multiple slits is given by Richard Feynman[5].

Einstein's box

Another of Einstein's thought experiments was designed to challenge the time/energy uncertainty principle. It is very similar to the slit experiment in space, except here the narrow window the particle passes through is in time:

- Consider a box filled with light. The box has a shutter that a clock opens and quickly closes at a precise time, and some of the light escapes. We can set the clock so that the time that the energy escapes is known. To measure the amount of energy that leaves, Einstein proposed weighing the box just after the emission. The missing energy lessens the weight of the box. If the box is mounted on a scale, it is naively possible to adjust the parameters so that the uncertainty principle is violated.

Bohr spent a day considering this setup, but eventually realized that if the energy of the box is precisely known, the time at which the shutter opens is uncertain. If the case, scale, and box are in a gravitational field, then the uncertainty in the position of the clock in the gravitational field alters its ticking rate. This can introduce the right amount of uncertainty. This was ironic, because it was Einstein himself who first discovered gravity's effect on clocks.
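Bohr's argument is usually presented along the following lines; this is a sketch of the standard textbook version, not a quotation. The symbols are assumptions introduced here: $\Delta m$ is the accuracy of the weighing, $\Delta q$ the uncertainty in the vertical position of the box on the scale, $\Delta p$ the corresponding momentum uncertainty, $T$ the duration of the weighing, $g$ the gravitational acceleration, and $\Delta T$ the resulting uncertainty in the clock reading.

```latex
\begin{align}
\Delta p &\gtrsim \frac{h}{\Delta q}
  && \text{(position--momentum uncertainty of the box)} \\
\Delta p &< T\, g\, \Delta m
  && \text{(impulse that must be resolved during the weighing)} \\
\frac{\Delta T}{T} &= \frac{g\, \Delta q}{c^{2}}
  && \text{(gravitational shift of the clock rate)} \\
\Delta T &> \frac{h}{c^{2}\, \Delta m}
  \;\Longrightarrow\; \Delta E\, \Delta T > h
  && \text{(using } \Delta E = c^{2}\, \Delta m\text{)}
\end{align}
```

Combining the first two lines gives $T g\,\Delta q > h/\Delta m$, and inserting this into the third line yields the last one, so the more accurately the escaped energy is weighed, the less accurately the emission time is known.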

EPR measurements

Bohr was compelled to modify his understanding of the uncertainty principle after another thought experiment by Einstein. In 1935, Einstein, Podolsky and Rosen (see EPR paradox) published an analysis of widely separated entangled particles. Measuring one particle, Einstein realized, would alter the probability distribution of the other, yet here the other particle could not possibly be disturbed. This example led Bohr to revise his understanding of the principle, concluding that the uncertainty was not caused by a direct interaction.[6]

But Einstein came to much more far-reaching conclusions from the same thought experiment. He held as a "natural basic assumption" that a complete description of reality would have to predict the results of experiments from "locally changing deterministic quantities", and therefore would have to include more information than the maximum allowed by the uncertainty principle.

In 1964 John Bell showed that this assumption can be falsified, since it would imply a certain inequality between the probabilities of different experiments. Experimental results confirm the predictions of quantum mechanics, ruling out Einstein's basic assumption that led him to the suggestion of his hidden variables. (Ironically, this is one of the best examples of Karl Popper's philosophy of invalidating a theory by falsification experiments: here Einstein's "basic assumption" was falsified by experiments based on Bell's inequalities. For Karl Popper's objections to the Heisenberg inequality itself, see below.)

While it is possible to assume that quantum mechanical predictions are due to nonlocal hidden variables, and in fact David Bohm invented such a formulation, this is not a satisfactory resolution for the vast majority of physicists. The question of whether a random outcome is predetermined by a nonlocal theory can be philosophical, and potentially intractable. If the hidden variables are not constrained, they could just be a list of random digits that are used to produce the measurement outcomes. To make it sensible, the assumption of nonlocal hidden variables is sometimes augmented by a second assumption — that the size of the observable universe puts a limit on the computations that these variables can do. A nonlocal theory of this sort predicts that a quantum computer encounters fundamental obstacles when it tries to factor numbers of approximately 10,000 digits or more, an achievable task in quantum mechanics[7].

Popper's criticism

Karl Popper criticized Heisenberg's form of the uncertainty principle, that a measurement of position disturbs the momentum, based on the following observation: if a particle with definite momentum passes through a narrow slit, the diffracted wave has some amplitude to go in the original direction of motion. If the momentum of the particle is measured after it goes through the slit, there is always some probability, however small, that the momentum will be the same as it was before.

Popper thinks of these rare events as falsifications of the uncertainty principle in Heisenberg's original formulation. To preserve the principle, he concludes that Heisenberg's relation does not apply to individual particles or measurements, but only to many identically prepared particles, called ensembles. Popper's criticism applies to nearly all probabilistic theories, since a probabilistic statement requires many measurements to either verify or falsify.

Popper's criticism does not trouble physicists who subscribe to the Copenhagen interpretation. Popper's presumption is that the measurement reveals some preexisting information about the particle, the momentum, which the particle already possesses. According to the Copenhagen interpretation, the quantum mechanical description, the wavefunction, is not a reflection of ignorance about the values of some more fundamental quantities; it is the complete description of the state of the particle. In this philosophical view, Popper's example is not a falsification, since after the particle diffracts through the slit and before the momentum is measured, the wavefunction is changed so that the momentum is still as uncertain as the principle demands.

Refinements

Entropic uncertainty principle

While formulating the many-worlds interpretation of quantum mechanics in 1957, Hugh Everett III discovered a much stronger formulation of the uncertainty principle[8]. In the inequality of standard deviations, some states, such as a wavefunction made up of a small number of very narrow bumps spread over a wide region, have a large standard deviation of position even though they are concentrated in a very small total width. In this case, the momentum uncertainty is much larger than the standard deviation inequality would suggest. A better inequality uses the Shannon information content of the distribution, a measure of the number of bits learned when a random variable described by a probability distribution has a certain value:

$$I_x = -\int |\psi(x)|^2 \log_2 |\psi(x)|^2 \, dx$$

The interpretation of $I_x$ is that the number of bits of information an observer acquires when the value of x is given to accuracy $\varepsilon$ is equal to $I_x + \log_2(1/\varepsilon)$. The second part is just the number of bits past the decimal point; the first part is a logarithmic measure of the width of the distribution. For a uniform distribution of width $\Delta x$ the information content is $\log_2 \Delta x$. This quantity can be negative, which means that the distribution is narrower than one unit, so that learning the first few bits past the decimal point gives no information since they are not uncertain.

Taking the logarithm of Heisenberg's formulation of uncertainty in natural units ($\hbar = 1$, so that Planck's constant is $h = 2\pi$) gives

$$\log_2(\Delta x \, \Delta p) \gtrsim \log_2(2\pi)$$

but the lower bound is not precise.

Everett (and Hirschman[9]) conjectured that for all quantum states, with $I_p$ defined in the same way from the momentum-space wavefunction:

$$I_x + I_p \ge \log_2(e\pi)$$

This was proven by Beckner in 1975[10].
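As a numerical illustration, the entropic bound can be checked for a Gaussian wavepacket, for which the inequality is known to hold with equality. The sketch below is an assumption-laden example rather than part of the original argument: it works in natural units ($\hbar = 1$), uses a minimum-uncertainty Gaussian with momentum spread $\sigma_p = 1/(2\sigma_x)$, and estimates the integrals with a simple midpoint rule. All function names are hypothetical.

```python
import math

def differential_entropy_bits(density, lo, hi, steps=20000):
    """Midpoint-rule estimate of -integral(rho * log2(rho)) over [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        rho = density(lo + (k + 0.5) * dx)
        if rho > 0.0:  # skip points where the density underflows to zero
            total -= rho * math.log2(rho) * dx
    return total

def gaussian_density(sigma):
    """Zero-mean normal probability density with standard deviation sigma."""
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return lambda t: norm * math.exp(-t * t / (2.0 * sigma * sigma))

def entropy_sum_for_gaussian(sigma_x):
    """I_x + I_p for a Gaussian wavepacket, assuming hbar = 1."""
    sigma_p = 1.0 / (2.0 * sigma_x)  # minimum-uncertainty relation
    i_x = differential_entropy_bits(gaussian_density(sigma_x),
                                    -12.0 * sigma_x, 12.0 * sigma_x)
    i_p = differential_entropy_bits(gaussian_density(sigma_p),
                                    -12.0 * sigma_p, 12.0 * sigma_p)
    return i_x + i_p

bound = math.log2(math.e * math.pi)
for sigma_x in (0.25, 1.0, 4.0):
    # The sum should match the bound regardless of the packet width.
    print(sigma_x, entropy_sum_for_gaussian(sigma_x), bound)
```

Narrowing the packet in position lowers $I_x$ and raises $I_p$ by the same amount, so the sum stays at the bound for every width.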

Derivations

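When linear operators A and B act on a function $\psi(x)$, they don't always commute. A clear example is when operator B multiplies by x, while operator A takes the derivative with respect to x. Then

$$(AB - BA)\psi = \frac{d}{dx}(x\psi) - x\frac{d\psi}{dx} = \psi$$

which in operator language means that

$$AB - BA = 1$$

This example is important, because it is very close to the canonical commutation relation of quantum mechanics. There, the position operator multiplies the value of the wavefunction by x, while the corresponding momentum operator differentiates and multiplies by $-i\hbar$, so that:

$$[X, P] = XP - PX = i\hbar$$

It is the nonzero commutator that implies the uncertainty.

For any two operators A and B:

$$\|A\psi\|^2 \, \|B\psi\|^2 \ge |\langle A\psi | B\psi \rangle|^2$$

which is a statement of the Cauchy-Schwarz inequality for the inner product of the two vectors $A|\psi\rangle$ and $B|\psi\rangle$. For Hermitian operators this inner product is the expectation value of the product AB, whose imaginary part is $\langle\psi|AB - BA|\psi\rangle / 2i$. The magnitude of the expectation value is at least the magnitude of its imaginary part:

$$|\langle\psi|AB|\psi\rangle|^2 \ge \frac{1}{4} |\langle\psi|AB - BA|\psi\rangle|^2$$

and putting the two inequalities together for Hermitian operators gives a form of the Robertson-Schrödinger relation:

$$\|A\psi\|^2 \, \|B\psi\|^2 \ge \frac{1}{4} |\langle\psi|[A, B]|\psi\rangle|^2$$

and the uncertainty principle is a special case.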
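The non-commuting example of differentiation and multiplication by x can be checked numerically. The following is a minimal sketch, assuming a central finite difference as a stand-in for the derivative and an arbitrary smooth test function; the function names and the choice of test function are hypothetical.

```python
import math

def derivative(f, h=1e-4):
    """Operator A: central-difference approximation to d/dx."""
    return lambda x: (f(x + h) - f(x - h)) / (2.0 * h)

def multiply_by_x(f):
    """Operator B: multiplication by x."""
    return lambda x: x * f(x)

def commutator_on(f):
    """Return (AB - BA) f with A = d/dx and B = multiplication by x."""
    ab = derivative(multiply_by_x(f))   # differentiate x * f
    ba = multiply_by_x(derivative(f))   # x times the derivative of f
    return lambda x: ab(x) - ba(x)

def psi(x):
    """Arbitrary smooth test function (an assumption of this sketch)."""
    return math.exp(-x * x / 2.0) * math.cos(3.0 * x)

result = commutator_on(psi)
for x in (-1.3, 0.0, 0.7, 2.5):
    # The two printed values should agree up to discretization error.
    print(x, result(x), psi(x))
```

Applying the commutator returns the original function, which is the content of the operator statement that the commutator of the derivative with x is the identity.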

Physical interpretation

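The inequality above acquires its physical interpretation:

$$\Delta_\psi A \, \Delta_\psi B \ge \frac{1}{2} \left| \langle [A, B] \rangle_\psi \right|$$

where

$$\langle X \rangle_\psi = \langle\psi|X|\psi\rangle$$

is the mean of observable X in the state ψ and

$$\Delta_\psi X = \sqrt{\langle X^2 \rangle_\psi - \langle X \rangle_\psi^2}$$

is the standard deviation of observable X in the system state ψ.

This follows by substituting $A - \langle A \rangle_\psi$ for A and $B - \langle B \rangle_\psi$ for B in the general operator norm inequality; the imaginary part of the product, the commutator, is unaffected by the shift:

$$\|(A - \langle A \rangle_\psi)\psi\| \, \|(B - \langle B \rangle_\psi)\psi\| \ge \frac{1}{2} \left| \langle\psi|[A, B]|\psi\rangle \right|$$

The big side of the inequality is the product of the norms of $(A - \langle A \rangle_\psi)\psi$ and $(B - \langle B \rangle_\psi)\psi$, which in quantum mechanics are the standard deviations of A and B. The small side is half the magnitude of the expected value of the commutator, which for position and momentum is just $\hbar/2$.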
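For position and momentum, the two sides of the inequality can be compared numerically on a grid. The sketch below is illustrative only and rests on several assumptions: natural units ($\hbar = 1$), a real-valued wavefunction (so that the mean momentum vanishes and the mean of $P^2$ is $\hbar^2 \int (d\psi/dx)^2\,dx$), and a simple finite-difference quadrature. The function name `deviations` is hypothetical.

```python
import math

HBAR = 1.0  # natural units, an assumption of this sketch

def deviations(psi, lo=-12.0, hi=12.0, steps=8000):
    """Return (delta_x, delta_p) for a real wavefunction psi on [lo, hi]."""
    dx = (hi - lo) / steps
    xs = [lo + (k + 0.5) * dx for k in range(steps)]
    vals = [psi(x) for x in xs]
    norm = sum(v * v for v in vals) * dx
    mean_x = sum(x * v * v for x, v in zip(xs, vals)) * dx / norm
    mean_x2 = sum(x * x * v * v for x, v in zip(xs, vals)) * dx / norm
    # For real psi: <P> = 0 and <P^2> = hbar^2 * integral of (dpsi/dx)^2.
    grad_sq = sum(((vals[k + 1] - vals[k]) / dx) ** 2
                  for k in range(steps - 1)) * dx
    mean_p2 = HBAR ** 2 * grad_sq / norm
    return math.sqrt(mean_x2 - mean_x ** 2), math.sqrt(mean_p2)

def gaussian(x):
    """Gaussian wavepacket (unnormalized)."""
    return math.exp(-x * x / 2.0)

def odd_packet(x):
    """A non-Gaussian example: x times a Gaussian (unnormalized)."""
    return x * math.exp(-x * x / 2.0)

for name, wavefunction in (("gaussian", gaussian), ("odd packet", odd_packet)):
    delta_x, delta_p = deviations(wavefunction)
    # Compare the product of deviations with the small side, hbar / 2.
    print(name, delta_x * delta_p, HBAR / 2.0)
```

The Gaussian saturates the bound, with a product close to $\hbar/2$, while the second wavefunction gives a product close to $3\hbar/2$, comfortably above it.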

Matrix mechanics

In matrix mechanics, the commutator of the matrices X and P is always nonzero: it is a constant multiple of the identity matrix. This means that it is impossible for a state to have definite values x for X and p for P, since then XP would be equal to the number xp and would equal PX.

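The commutator of two matrices is unchanged when they are shifted by a constant multiple of the identity: for any two real numbers x and p,

$$[X - x, P - p] = [X, P] = i\hbar$$

Given any quantum state $|\psi\rangle$, define the number x

$$x = \langle\psi|X|\psi\rangle$$

to be the expected value of the position, and

$$p = \langle\psi|P|\psi\rangle$$

to be the expected value of the momentum. The quantities $\hat{X} = X - x$ and $\hat{P} = P - p$ are only nonzero to the extent that the position and momentum are uncertain, to the extent that the state contains some values of X and P that deviate from the mean. The expected value of the commutator

$$\langle\psi|\hat{X}\hat{P} - \hat{P}\hat{X}|\psi\rangle = i\hbar$$

can only be nonzero if the deviations in X in the state times the deviations in P are large enough.

The size of the typical matrix elements can be estimated by summing the squares over the energy states $|i\rangle$:

$$\sum_i |\langle\psi|\hat{X}|i\rangle|^2 = \langle\psi|\hat{X}^2|\psi\rangle$$

Since this is equal to the square of the deviation $\Delta x$, the matrix elements have a size approximately given by the deviation, and likewise for $\hat{P}$ and $\Delta p$.

So, to produce the canonical commutation relations, the product of the deviations in any state has to be about $\hbar$:

$$\Delta x \, \Delta p \approx \hbar$$

This heuristic estimate can be made into a precise inequality using the Cauchy-Schwarz inequality, exactly as before. The inner product of the two vectors in parentheses:

$$\left| \left( \langle\psi|\hat{X} \right) \left( \hat{P}|\psi\rangle \right) \right|$$

is bounded above by the product of the lengths of each vector:

$$|\langle\psi|\hat{X}\hat{P}|\psi\rangle| \le \sqrt{\langle\psi|\hat{X}^2|\psi\rangle} \, \sqrt{\langle\psi|\hat{P}^2|\psi\rangle}$$

so, rigorously, for any state:

$$\Delta x \, \Delta p \ge |\langle\psi|\hat{X}\hat{P}|\psi\rangle|$$

The real part of a matrix M is $(M + M^\dagger)/2$, so that the real part of the product of two Hermitian matrices is:

$$\frac{\hat{X}\hat{P} + \hat{P}\hat{X}}{2}$$

while the imaginary part is

$$\frac{\hat{X}\hat{P} - \hat{P}\hat{X}}{2i} = \frac{[\hat{X}, \hat{P}]}{2i}$$

The magnitude of $\langle\psi|\hat{X}\hat{P}|\psi\rangle$ is bigger than the magnitude of its imaginary part, which is the expected value of the imaginary part of the matrix:

$$|\langle\psi|\hat{X}\hat{P}|\psi\rangle| \ge \frac{1}{2} \left| \langle\psi|[\hat{X}, \hat{P}]|\psi\rangle \right| = \frac{\hbar}{2}$$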

Note that the uncertainty product is for the same reason bounded below by the expected value of the anticommutator, which adds a term to the uncertainty relation. The extra term is not as useful for the uncertainty of position and momentum, because it has zero expected value in a gaussian wavepacket, like the ground state of a harmonic oscillator. The anticommutator term is useful for bounding the uncertainty of spin operators though.
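The role of the anticommutator term for spin can be illustrated with the Pauli matrices. The sketch below is an assumption-based example, not part of the original derivation: it takes a spin-1/2 state parametrized by two angles (a hypothetical `spinor` helper), and compares the product of the variances of $\sigma_x$ and $\sigma_y$ with the squared commutator term $|\langle [A,B] \rangle / 2|^2$ and the squared anticommutator term $(\langle AB + BA \rangle / 2 - \langle A \rangle \langle B \rangle)^2$.

```python
import cmath
import math

# Pauli matrices as nested lists.
SIGMA_X = [[0, 1], [1, 0]]
SIGMA_Y = [[0, -1j], [1j, 0]]

def matmul(a, b):
    """Product of two 2x2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def expectation(op, psi):
    """<psi|op|psi> for a normalized two-component state."""
    return sum(psi[i].conjugate() * op[i][j] * psi[j]
               for i in range(2) for j in range(2))

def spinor(theta, phi):
    """Normalized spin-1/2 state pointing along the angles (theta, phi)."""
    return [complex(math.cos(theta / 2.0)),
            cmath.exp(1j * phi) * math.sin(theta / 2.0)]

def uncertainty_terms(a, b, psi):
    """Return (variance product, commutator term, anticommutator term)."""
    mean_a = expectation(a, psi).real
    mean_b = expectation(b, psi).real
    var_a = expectation(matmul(a, a), psi).real - mean_a ** 2
    var_b = expectation(matmul(b, b), psi).real - mean_b ** 2
    mean_ab = expectation(matmul(a, b), psi)
    mean_ba = expectation(matmul(b, a), psi)
    commutator_term = abs((mean_ab - mean_ba) / 2.0) ** 2
    anticommutator_term = ((mean_ab + mean_ba).real / 2.0 - mean_a * mean_b) ** 2
    return var_a * var_b, commutator_term, anticommutator_term

# A state in the equatorial plane: the expected commutator vanishes here.
product, comm, anti = uncertainty_terms(
    SIGMA_X, SIGMA_Y, spinor(math.pi / 2.0, math.pi / 4.0))
print(product, comm, anti)
```

For this state the commutator term alone gives only the trivial bound of zero, while the anticommutator term accounts for the whole variance product; for pure spin-1/2 states and these two operators, the two terms together reproduce the variance product exactly.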

Wave mechanics

In Schrödinger's wave mechanics, the quantum mechanical wavefunction contains information about both the position and the momentum of the particle. The position of the particle is where the wave is concentrated, while the momentum is the typical wavelength.

The wavelength of a localized wave cannot be determined very well. If the wave extends over a region of size L and the wavelength is approximately $\lambda$, the number of cycles in the region is approximately $L/\lambda$. The inverse of the wavelength can be changed by about $1/L$ without changing the number of cycles in the region by a full unit, and this is approximately the uncertainty in the inverse of the wavelength,